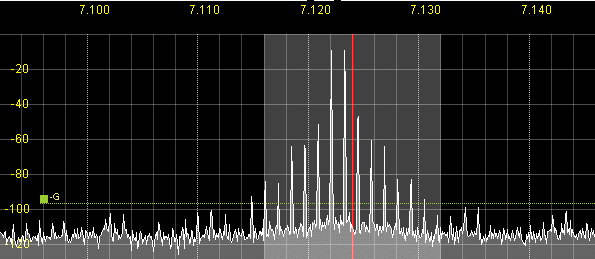

RF power amplifier design

While many electronics textbooks have a chapter devoted to RF

power amplifiers, and also several complete books about this matter

exist, I haven't yet found any that explains the whole topic in a way

that would enable an electronics hobbyist, or even a practically minded

professional circuit designer, to fully understand the matter and

achieve designs that work well and for a long time. Most books treat

this matter in a totally theoretical way, with excessive use of maths.

Practically-minded electronicians most commonly don't understand the

sort of mathematical language used in those books, while the

mathematicians and theoretically minded engineers who do understand

them are usually not the people who would actually design and build an

amplifier!

Many people have asked me to write an article about RF power

amplifiers, in a way that practical electronicians can understand. Also

over

many years I have come to acquire a better understanding of these

circuits, which often work in ways that are far less trivial than their

schematics look. Now, in 2020, confined at home due to the COVID-19 pandemic, the time

has come to write this article.

Most books about RF power amplifiers are mainly oriented

toward the cellphone market, so they place a big emphasis on UHF and

microwave amplifiers, often not even mentioning HF. For this reason I

will aim my article mainly at HF, extendable to VHF. In fact, as a

long-time radio amateur, I have ham radio applications in mind as my

primary

design objects. So this article is oriented mainly at power amplifiers

operating in the range of 1.8 to 54MHz, sometimes to 148MHz, in a power

range up to 1500W. I will include driver stage design down to the

milliwatt level.

This is a very long web page, almost long enough to be printed in book

form, and if you are really interested in the

matter, you will need to work through it step by step. It's organized

by background color: The main article has my traditional yellow

background, while accessory explanations are on a blue background. If

you already know what I explain in such a Blue Block, you can skip

right over it and continue reading the main article.

I will consider the specific quirks of both BJTs and MOSFETs. Since

MOSFETs are far more common nowadays, I will draw MOSFETs in

all those situations that are applicable to both types of transistors.

Throughout this article, when I write "transistor" it means that

the matter described is applicable to both types, and I will talk about

source, drain and gate as a matter of convention, even when that could

be emitter, collector and base. When I write "BJT"

and "MOSFET" it means that the matter is specific to one type.

Totally obsolete devices, like vacuum tubes, aren't considered. And

YES, in

2020 tubes are indeed totally and fully obsolete, in the power and

frequency ranges considered in this article! So don't be mad at me, and

try not to shed any tears over this fact. Even BJTs are

basically obsolete at this time for RF power use, and most are no

longer being manufactured, but there is still a lot of equipment around

that

uses BJTs, so I'm including them.

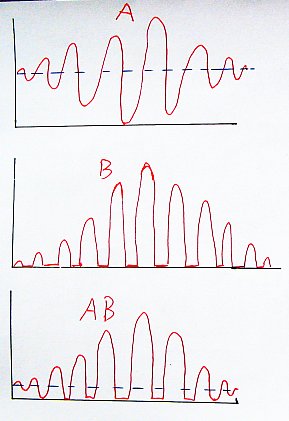

Classes of amplifiers:

In

the beginning of electronics, shortly after Adam invented the vacuum

tube and Eve promptly criticized him for doing so, amplifiers were

divided into three

main

classes. Any amplifier in which the active device

conducted current all the time, throughout the entire signal

waveform, was called "class A". Any amplifier in which the active

device conducted current during exactly one half of the driving

signal's waveform, usually the positive half, was called "class B". And

any amplifier in which the active device conducted current during less

than one half the driving waveform was called "class C".

Soon

after doing this classification, the Adam of electronics noticed that

he had forgotten to consider all those amplifiers in which the active

device

conducts current during more than half of the signal's waveform, but

less than the whole time. Since this mode of operation falls between

class A and class B, and given that despite much desperate searching no

letter

could be found between those two, this operation mode was called "class

AB".

Electronicians

love to talk in angles. So the whole period of an RF cycle is

considered to have 360 degrees. For this reason one can say that

transistors operating in class A conduct over 360°, while in

class AB they conduct during less than 360° but more than 180°, in

class B they conduct exactly during 180°, and in class C they conduct

for less than 180° of the signal's period.
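The angle-based definitions above can be written down as a tiny Python sketch. The helper name `amplifier_class` is hypothetical, chosen only for illustration, and the exact-equality checks for 360° and 180° only make sense for idealized numbers.

```python
def amplifier_class(conduction_angle_deg: float) -> str:
    """Return the classic class name for a conduction angle in degrees."""
    if not 0 < conduction_angle_deg <= 360:
        raise ValueError("conduction angle must be above 0 and at most 360 degrees")
    if conduction_angle_deg == 360:
        return "A"    # conducts during the whole cycle
    if conduction_angle_deg > 180:
        return "AB"   # more than half, less than the whole cycle
    if conduction_angle_deg == 180:
        return "B"    # exactly half the cycle
    return "C"        # less than half the cycle


for angle in (360, 270, 180, 120):
    print(f"{angle} degrees -> class {amplifier_class(angle)}")
```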

Since it

is very hard, or even impossible, to bias a transistor precisely to its

conduction threshold (which by the way is poorly defined!), so that it

conducts exactly

for 180°,

strictly speaking, true class B amplifiers don't exist! For that reason some

designers use the "class B" designation for any amplifiers that are close to true class

B, that is, amplifiers that operate in class AB but with a very small

idling current, so that they conduct for just slightly more than 180°.

I will call such amplifiers class AB, but if you read certain textbooks

and papers you need to know that my class AB could be called class B by

some authors.

A

theoretically perfect amplifier, using perfect components, would

achieve a maximum efficiency of 50% in class A and 78.5% in class B,

both at the limit of starting to cause distortion by clipping the

signal. A class C amplifier

has higher efficiency than that. The smaller its conduction angle is,

the

higher is its efficiency, but the lower is its output power. As the

conduction angle approaches zero, the efficiency approaches 100%, but

the power output approaches zero, so that's not very useful.
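The trade-off between efficiency and output power can be sketched numerically. The formulas below are the standard textbook result for an idealized device, and they rest on assumptions that are not spelled out in this article: the transistor is perfectly linear above its conduction threshold, the drain voltage swings all the way down to zero, and a tuned load removes all harmonics. The function names are hypothetical.

```python
import math


def ideal_efficiency(conduction_angle_deg: float) -> float:
    """Ideal efficiency (0..1) for a given conduction angle in degrees."""
    theta = math.radians(conduction_angle_deg)
    numerator = theta - math.sin(theta)
    denominator = 2 * (2 * math.sin(theta / 2) - theta * math.cos(theta / 2))
    return numerator / denominator


def relative_output_power(conduction_angle_deg: float) -> float:
    """Output power relative to class A, for the same peak current and voltage swing."""
    theta = math.radians(conduction_angle_deg)
    # Fundamental current amplitude as a fraction of the peak device current.
    fundamental = (theta - math.sin(theta)) / (2 * math.pi * (1 - math.cos(theta / 2)))
    return fundamental / 0.5  # class A gives a fundamental of half the peak current


for angle in (360, 270, 180, 120, 60, 10):
    print(f"{angle:3d} degrees: efficiency {ideal_efficiency(angle):6.1%}, "
          f"relative power {relative_output_power(angle):5.2f}")
```

Under these assumptions the 360° and 180° rows reproduce the 50% and 78.5% figures, and the small angles show the efficiency creeping toward 100% while the available power collapses.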

It is

often said that class A, AB and B amplifiers are linear, while class C

amplifiers are not. But this is incorrect! Firstly, all amplifiers have

residual nonlinearities that cannot be fully eliminated. Secondly, an

A, AB or B amplifier will turn dramatically nonlinear when overdriven,

and sometimes they are deliberately operated in this overdriven,

nonlinear mode.

And thirdly, and this is a point many people haven't realized, a class

C amplifier can be built to be linear! All it takes is devising a

biasing scheme that keeps the class C amplifier operating over a constant conduction

angle, even while the amplitude varies. But this

technique is rarely applied, so in practice most class C amplifiers are

indeed nonlinear.
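The point about linear class C operation can be checked with a small numerical sketch. It assumes a strongly idealized transistor whose current is proportional to how far the sinusoidal drive exceeds a threshold, with the threshold standing in for the bias. A fixed threshold models ordinary class C, while a threshold that tracks the drive amplitude is a hypothetical scheme that keeps the conduction angle constant. All names are invented for this illustration.

```python
import math


def fundamental_amplitude(drive_amplitude: float, threshold: float,
                          samples: int = 3600) -> float:
    """Fundamental-frequency amplitude of the current pulses of an idealized device.

    The device conducts a current equal to (drive - threshold) whenever the
    sinusoidal drive exceeds the threshold, and no current otherwise.
    """
    total = 0.0
    for n in range(samples):
        x = 2 * math.pi * n / samples
        current = max(0.0, drive_amplitude * math.sin(x) - threshold)
        total += current * math.sin(x)
    return 2 * total / samples


for amplitude in (0.5, 1.0, 2.0):
    fixed_gain = fundamental_amplitude(amplitude, 0.4) / amplitude
    tracking_gain = fundamental_amplitude(amplitude, 0.4 * amplitude) / amplitude
    print(f"drive {amplitude:.1f}: gain with fixed bias {fixed_gain:.3f}, "
          f"gain with tracking bias {tracking_gain:.3f}")
```

In this model the gain with the fixed threshold changes with drive level, which is nonlinear behavior, while the gain with the tracking threshold stays the same at every drive level.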

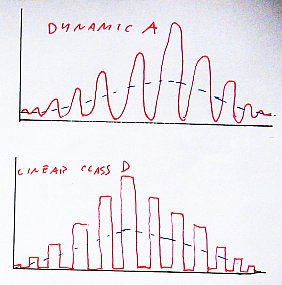

Long

after Adam had ceased doing electronics, more amplifier classes were

added, and these aren't based on conduction angle. And they are

usually

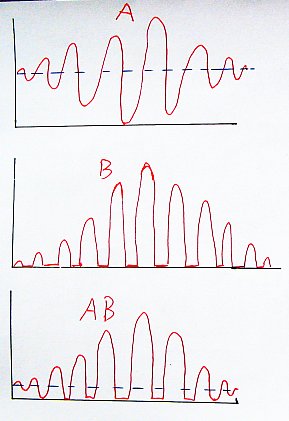

more efficient than the old ones. In class D amplifiers the transistors

are switched on and off, instead of being linearly driven. There are

two ways to implement a class D amplifier. In one of them the

transistors switch the output voltage,

which then attains a nearly square waveform, while the output current

is

defined

by the resonant circuit coming after the transistors, and is roughly

sinusoidal. In the other kind, the transistors switch the output current, resulting

in a nearly square current waveform, while the resonant circuit at

the output defines the voltage waveform, which becomes nearly

sinusoidal. The two types are called, appropriately, "voltage-switching

class D", and "current-switching class D" amplifiers.

In class

E amplifiers the transistors are also switched on and off, but a

resonant output network is used that shapes both the current and

voltage waveforms to be non-square, and this largely avoids

simultaneous voltage and current on the transistor, further improving

efficiency. If a transistor actually could be switched

instantaneously, class E wouldn't be necessary, as class D would be

perfect - but real transistors can't switch that fast. Class E

amplifiers basically work by the tuned output network largely

lifting the instantaneous load on the transistor during the

non-zero duration switching transitions, which enables getting very

high efficiency from relatively slow transistors.

Class F

amplifiers also use transistors in switching mode, but use a more

complex output network that treats the odd harmonics in one way, and

the even ones in another. This results in voltage and current waveforms

that optimize both the efficiency and the achievable power output, for

a given transistor.

In addition to these 7 basic amplifier classes, there are several more,

which have been defined by specific designers and companies, and

are often treated as intellectual property by them. Two different

companies might assign different class letters to basically the same

amplifier type.

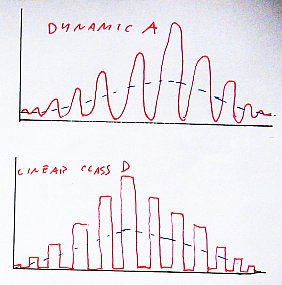

It should be noted that the definitions of the classic classes A to C,

and the

new kids D to F, overlap, because the classic ones are defined by

conduction

angle, and the modern ones by circuit layout and waveforms. Transistors

in class D, E and F amplifiers theoretically operate with 50% on, 50%

off time, and thus would be considered class B amplifiers by the old

definition, driven into saturation. But often in practice their

duty cycle is slightly different from 50%, and thus they

become either class C or class AB amplifiers by the old

definition. This is important to bear in mind, because many transistor

datasheets give circuit examples which the manufacturers claim to

operate in class AB, but that truly are operating in class D. The power

output and efficiency claimed by the manufacturers are very high,

typical for class D,

but people reading the datasheets and noticing the "class AB" claim

will mistakenly believe that the transistor described can deliver that

high

power and efficiency while operating linearly! This has led many ham experimenters to

blow up expensive MOSFETs by operating them at unrealistically high

power levels in linear class AB, trying to achieve the same power that

the manufacturer claims for the nonlinear class AB / class D

hybrid test circuit.

RF power

amplifiers are best designed backwards. That is, you start

at the output side, and then continue toward the input side. When

designing a multistage amplifier, you start with the final stage, then

continue to the driver. Keeping with this logic, let's start looking at

output circuits for various types of amplifiers.

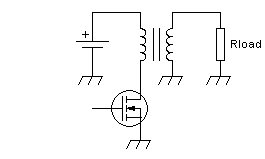

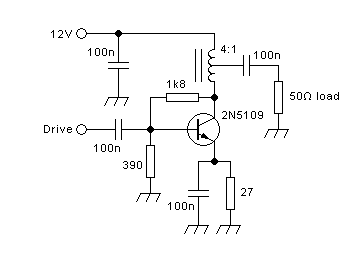

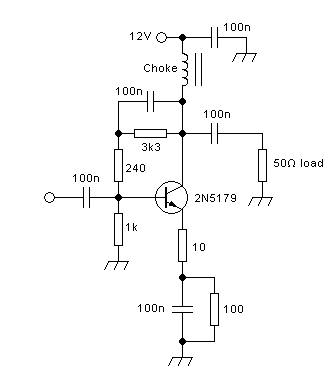

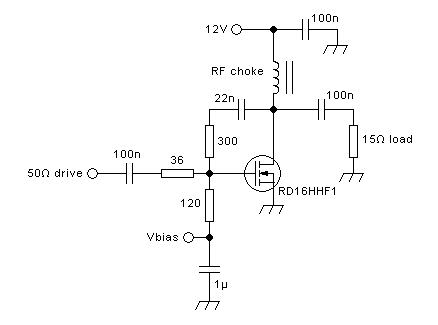

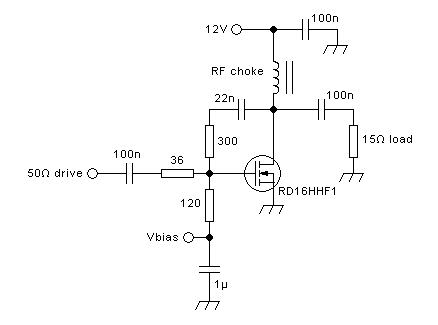

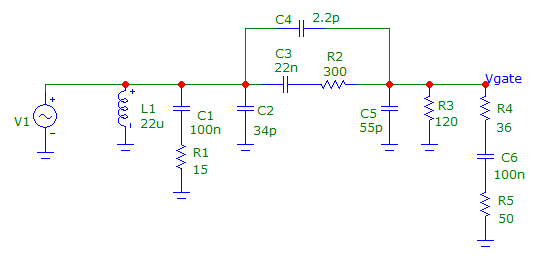

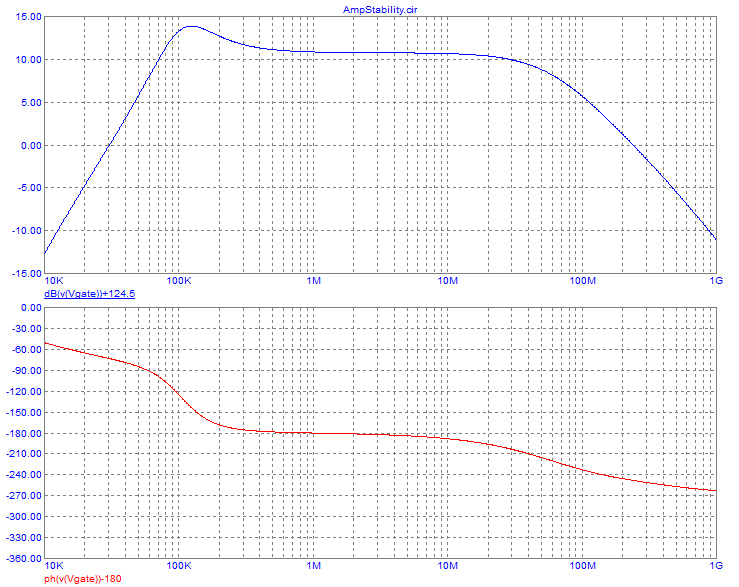

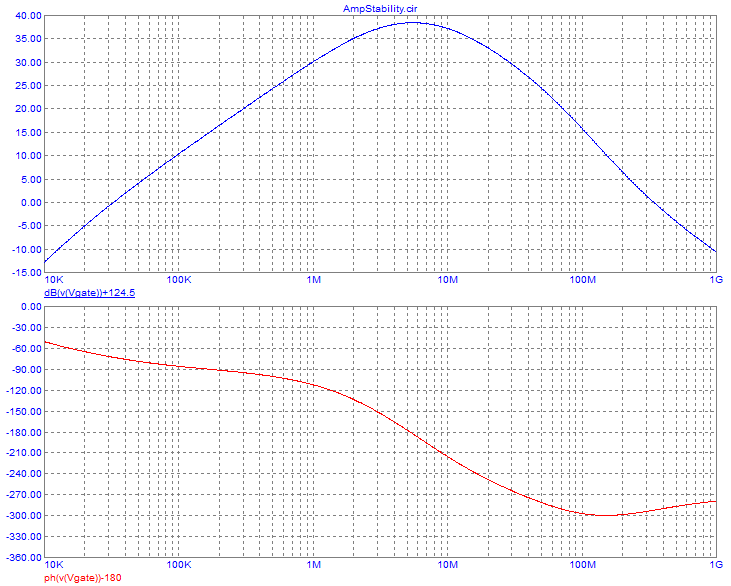

Single-transistor broadband amplifiers:

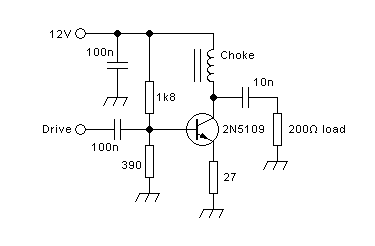

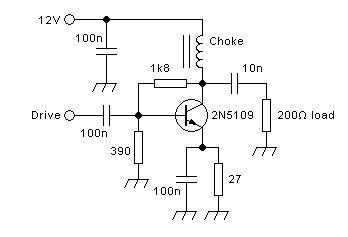

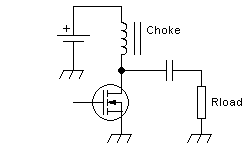

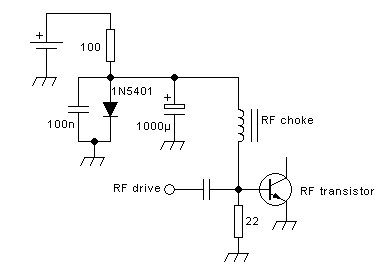

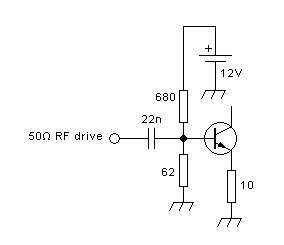

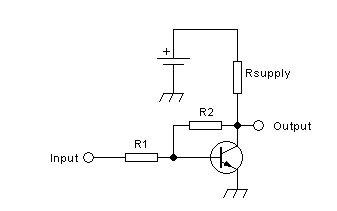

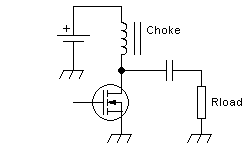

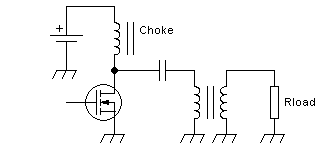

This kind of amplifier is usually operated in class A. There

is a constant supply current flowing through a choke. The

transistor conducts more or less of this current to ground, as

commanded by the drive signal. The remainder of the current is forced

to go through the load resistance. A coupling capacitor

allows the RF current to flow, while blocking the supply voltage.

In all circuits on this page, the battery represents a perfect, stable

voltage source. In a practical amplifier there wouldn't be a real

battery, of course, but a combination of bypass capacitors, typically

fed from a regulated power supply, sometimes through an

additional choke. And the gate, drawn open

here, obviously needs to be connected to a circuit that applies a DC

bias and an RF drive voltage.

Since a choke cannot have any DC voltage across it, the supply

voltage appears in full at the drain of the transistor. When the

transistor is

driven harder during the positive half cycle, it conducts more current

and thus temporarily pulls its drain voltage down. The additional

current flowing

in the transistor at that time comes from the capacitor, thus drawing a

negative current through the load resistor. Likewise, when the

transistor is being driven softer, during the negative half of the

driving waveform, it conducts less current than what's coming through

the choke, and the surplus must flow through the capacitor and

resistor. That's a positive current in the load resistor, and the

voltage at the drain becomes higher than the supply voltage.

In normal

operation, a typical RF power transistor can pull its drain voltage down to

about one tenth of the supply voltage. If the drive signal is

symmetric, and the transistor is linear and all

goes as it should, then the output signal will also be symmetric,

undistorted, and will reach a peak value equal to 90% of the supply

voltage. Thus the peak-to-peak

output voltage can reach 1.8 times the supply voltage, and since RMS

voltage is the peak-to-peak value divided by 2√2, that's an

RMS voltage of about 0.64 times the supply voltage. This is the

maximum effective undistorted RMS voltage that can appear on the load.

And since power is RMS voltage squared and then divided by load

resistance, it's easy to calculate the power available on the load. For

example, if the supply provides 12V, and the load resistor is 10Ω, then

we get 21.6Vp-p on the load, which is roughly 7.6Vrms, and on 10Ω that

makes roughly 5.8W. Note that the exact value depends on the actual

saturation voltage of the transistor, which changes according to

transistor type and operating conditions. The 10% saturation voltage I

assumed here is

typical, but individual situations can stray considerably from this

value.
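The arithmetic of this example is easy to wrap in a few lines of Python. This is only a sketch: the function name and the `saturation_fraction` parameter are made up for illustration, and the default of 0.10 is the typical 10% saturation voltage assumed in the text, not a property of any particular transistor.

```python
import math


def class_a_output(supply_v: float, load_ohm: float,
                   saturation_fraction: float = 0.10):
    """Return (peak-to-peak voltage, RMS voltage, power) available on the load."""
    peak_v = supply_v * (1 - saturation_fraction)  # largest undistorted peak swing
    vpp = 2 * peak_v
    vrms = vpp / (2 * math.sqrt(2))
    power_w = vrms ** 2 / load_ohm
    return vpp, vrms, power_w


vpp, vrms, power_w = class_a_output(12, 10)
print(f"{vpp:.1f} Vp-p, {vrms:.2f} Vrms, {power_w:.2f} W")
```

Changing `saturation_fraction` shows how strongly the available power depends on the real saturation voltage of the transistor.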

The DC current in the choke will be determined by the transistor's

biasing. To obtain correct, undistorted operation, it's necessary that

the transistor never runs out of drain current, and thus it has to be

biased

to a DC current larger than the peak signal current. That's class A

operation. Since this peak signal current is roughly 0.9 times the

supply voltage divided by the load resistance, in the example above it

is 1.08A, and you would probably fare well by biasing this transistor

to some DC value between 1.2 and 1.5A.

If you need a different output power, and the supply voltage is given,

then the only item you can adjust to suit that requirement is the load

resistance. But typically there is a requirement to make the amplifier

work into a specific

load resistance too! So it's most usual that some transformation of the

load resistance is required. This could be done by means of a

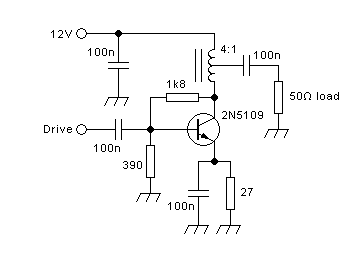

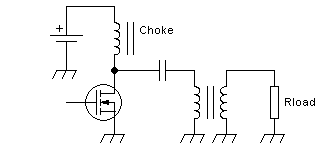

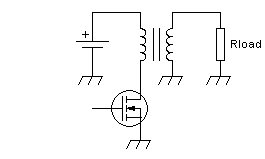

transformer inserted between the amplifier and the load, like this:

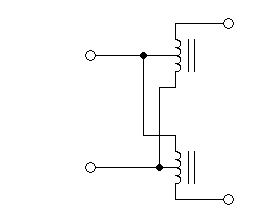

The transformer can be of the conventional type, as shown in this

drawing, or it could be a transmission line transformer. In a

conventional transformer, the turns ratio is equal to the

voltage ratio, equal to the inverse of the current ratio, and

equal to the square root of the resistance or impedance ratio. For

this reason it's important to always make sure you understand whether a

transformer said to have a certain ratio, such as 1:4, is meant to have

a turns ratio and voltage ratio of 1:4, or an impedance ratio of 1:4.

In RF practice it's more common to mean the impedance ratio, while low

frequency electronicians almost invariably talk

in terms of turns ratio, and some engineers coming from the

low

frequency world carry this over into the RF world. And since a

transformer with a 1:4 turns ratio has a 1:16 impedance ratio, the

difference is indeed

important!
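To avoid mixing up the two conventions, it can help to convert explicitly between them. The two helper names below are hypothetical, and the sketch assumes an ideal transformer.

```python
import math


def impedance_ratio_from_turns(turns_ratio: float) -> float:
    """Impedance ratio of an ideal transformer with the given turns ratio."""
    return turns_ratio ** 2


def turns_ratio_from_impedance(impedance_ratio: float) -> float:
    """Turns (and voltage) ratio needed for the given impedance ratio."""
    return math.sqrt(impedance_ratio)


print(impedance_ratio_from_turns(4))   # a 1:4 turns ratio, expressed as impedance ratio
print(turns_ratio_from_impedance(4))   # a 1:4 impedance ratio, expressed as turns ratio
```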

Let's assume that you want an output power of 30W from your 12V-powered

amplifier, and that you need to provide this power to a 50Ω load, which

is the most usual load resistance in RF power electronics. Multiply the

power times the load resistance, take the square root of the result,

and you get the RMS

voltage. In this case, that's 38.73Vrms. But your transistor's drain

still can only swing linearly over a voltage range that gives

7.64Vrms. So you need a transformer having a voltage ratio

and turns ratio of 1:5.07. In practice 1:5 will be close enough. That

transformer has a 1:25 impedance ratio, and so your 50Ω load resistance

gets transformed down to just 2Ω of load resistance on the transistor!

The peak current produced by your 10.8V maximum peak voltage into that

2Ω load is 5.4A, and so you will need to bias your class A amplifier

for at least 6, perhaps 7A DC, to get largely undistorted operation. At

12V

that's 72 to 84W of continuous input power, for barely 30W peak output

power.

That's a lousy efficiency, and this is the reason why such class A

amplifiers

are rarely used at power levels higher than a few watts. But they are

nice and simple and produce low distortion, which makes them useful at

low power levels.
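The design steps of this example can be retraced in a short Python sketch. The function names and the `saturation_fraction` parameter are hypothetical, the transformer is assumed to be ideal, and the 10% saturation voltage is the same typical value assumed in the text.

```python
import math


def required_turns_ratio(output_w: float, load_ohm: float, supply_v: float,
                         saturation_fraction: float = 0.10) -> float:
    """Step-up turns ratio n (drain side 1 : load side n) for the wanted power."""
    drain_vrms = supply_v * (1 - saturation_fraction) / math.sqrt(2)
    load_vrms = math.sqrt(output_w * load_ohm)
    return load_vrms / drain_vrms


def drain_side_conditions(load_ohm: float, turns_ratio: float, supply_v: float,
                          saturation_fraction: float = 0.10):
    """Return (load seen by the drain, peak signal current, output power)."""
    drain_load = load_ohm / turns_ratio ** 2
    peak_v = supply_v * (1 - saturation_fraction)
    return drain_load, peak_v / drain_load, peak_v ** 2 / (2 * drain_load)


n = required_turns_ratio(30, 50, 12)
drain_load, peak_i, out_w = drain_side_conditions(50, 5, 12)
print(f"exact ratio 1:{n:.2f}, drain load {drain_load:.1f} ohm, "
      f"peak current {peak_i:.1f} A, output {out_w:.1f} W")
for bias_a in (6, 7):
    input_w = 12 * bias_a  # class A draws its bias current continuously
    print(f"bias {bias_a} A: input {input_w} W, efficiency {out_w / input_w:.0%}")
```

With the rounded 1:5 ratio the output comes out slightly below 30W, and the efficiency at full output lands at roughly 40% and 35% for the two bias currents.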

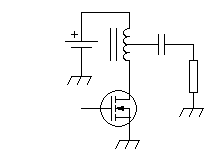

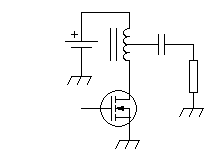

In fact they get much simpler and more economical by designing the

transformer to be able to handle the amplifier's DC current, thus

taking over the choke's function, allowing us to eliminate the choke

and

the capacitor:

This is the way many such amplifiers are built.

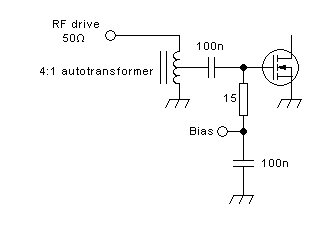

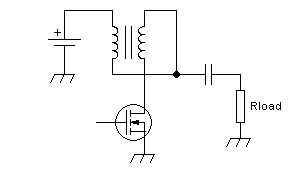

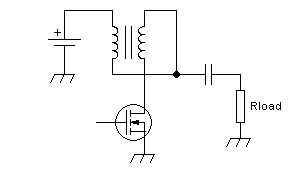

When the turns ratio required is close enough to 1:2 or 2:1, the

transformer's

size and cost can be reduced quite effectively by using an

autotransformer:

Since the autotransformer provides no galvanic isolation, the coupling

capacitor again becomes necessary. But the small autotransformer has

better coupling and lower parasitics, and thus gives a better frequency

coverage.

This autotransformer is often made with a bifilar winding, and then

it's often drawn in this way:

It's simply the same thing drawn differently. Some authors claim that

these bifilar transformers are transmission line transformers, but

that's a matter of debate and definitions. And so it's time for the

next Blue Block:

Transformers:

Conventional, transmission line, real and fake ones

A conventional transformer consists of two or more windings that share

a single magnetic core. Any magnetic field caused by current flowing in

one winding will induce a voltage in all windings. This

allows a transformer to transfer energy from one winding to the others,

and by making windings with different turn numbers, it can also convert

one combination of voltage and current into a totally different one,

keeping the power constant except for some slight losses. It is a very

versatile device, that found many applications in electricity and

electronics even before Adam invented the vacuum tube. But it has

important drawbacks too, called "parasitics". And the big problem for

us, when building RF power amplifiers, is that in RF power applications

these parasitics are particularly nasty.

All real-world conventional transformers have less than perfect

coupling between their windings. This shows up in their behavior as if

there were additional inductors connected in series with their

windings, these little inductors having their own separate magnetic circuits,

being uncoupled from the main transformer. As the frequency gets higher,

this "leakage inductance" ends up making a transformer unusable. In

most RF power applications, leakage inductance is what determines the

upper frequency limit at which a conventional transformer can work well

enough. It is possible to reduce the leakage inductance by intimately

mingling all windings, for example by using twisted wires to wind all

windings together, but this increases another parasitic, which is

the capacitance between windings. There is no free lunch here,

only a chance to make the best compromise.

The allowable wire length of a winding is limited for the simple reason

that signals travel along the wire at a finite speed, close to the speed of light. The

wire length has to be much smaller than the wavelength, for the

transformer to function normally. At HF this can already start to

become a problem, while at VHF it's a serious problem. And even in HF

amplifiers, transformers are often expected to carry non-sinusoidal

signals, which means that there are harmonics present that reach high

into the VHF range, and even into UHF. So, winding wire length can

become a critical factor even at HF.
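A quick way to judge a winding is to express its wire length as a fraction of the wavelength, both at the fundamental and at the harmonics. The sketch below assumes free-space propagation unless a velocity factor is given. The 0.3m wire length and the 30MHz frequency are arbitrary example values, and no fixed limit for "much smaller" is implied.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(freq_hz: float, velocity_factor: float = 1.0) -> float:
    """Wavelength in meters; velocity_factor below 1 models slower propagation."""
    return SPEED_OF_LIGHT_M_S * velocity_factor / freq_hz


def wire_length_fraction(wire_length_m: float, freq_hz: float,
                         velocity_factor: float = 1.0) -> float:
    """Wire length expressed as a fraction of the wavelength."""
    return wire_length_m / wavelength_m(freq_hz, velocity_factor)


wire_m = 0.3          # example winding wire length (assumed value)
fundamental_hz = 30e6
for harmonic in (1, 3, 5):
    fraction = wire_length_fraction(wire_m, harmonic * fundamental_hz)
    print(f"harmonic {harmonic}: wire is {fraction:.3f} wavelengths long")
```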

The larger a conventional transformer is, the higher are these

parasitics. But in high power applications we need rather large

transformers, to handle the high voltages and currents, so this is bad

news, and ultimately limits the applicability of conventional

transformers in RF power amplifiers.

Transmission line transformers instead work on a completely different

principle: They use one or more transmission lines of a properly chosen

impedance, which carry RF energy from the input side to the output side,

and which have something on them that makes the output side not "see" the

input side, except through the line proper. This "something" is usually

a magnetic core on which the

transmission line is wound, which makes the line act as a common-mode

choke: Any differential current on the two conductors can pass freely,

while all common-mode current is largely blocked by the inductance or

impedance of the line wound on the core.

The transmission lines can be coaxial cable, parallel line, twisted

pairs, etc. Some people draw transmission line transformer sections as

coax conductors with a core, or twisted lines with a core, or even two

windings on a core. The core is sometimes drawn using the standard

schematic symbol, but some designers prefer to physically draw a toroid

around the line. There is much creativity in this area of electronics

art. I prefer to draw them either as twisted line or as coax line, with

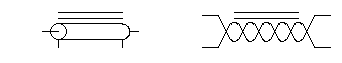

a symbolized core, like this:

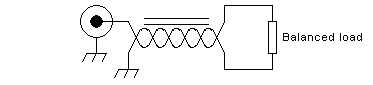

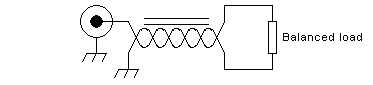

A single transmission line on a single core can be used as a balun: One

end of the line has one conductor grounded, the other conductor

connected to an unbalanced signal source. The other end of the line has

the same signal voltage between its conductors, at the same signal

current, but is no longer ground-referenced. It's a floating,

differential signal source. It can be used to drive a balanced load:

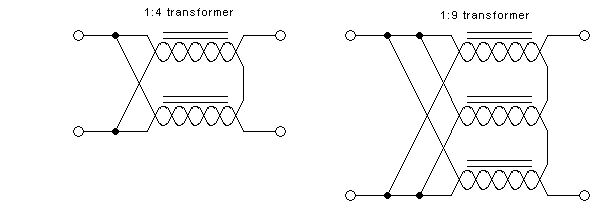

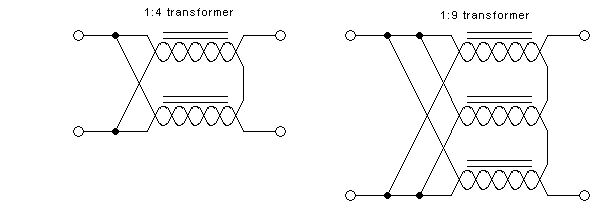

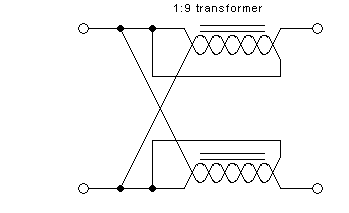

What's more fun is that two such transmission lines, each of them wound

on its own core, can be connected with their inputs in parallel and

their outputs in series, thus implementing a transformer with a 1:4

impedance ratio. This principle can be extended to three lines and

cores for a 1:9 transformer, and so on. These transformers can be

freely used with balanced or unbalanced circuits on each side, since

they all also provide balun functionality.

The beauty of these transmission line transformers is that they

almost don't have a practical upper frequency limit. Even when the

transmission lines are long relative to the wavelength, they are all

the same length, and so the output signals from all of them are in the

same phase, allowing series connection of the line outputs.

Whenever the transmission lines used to make such transformers have an

appreciable length, relative to the wavelength of the highest

frequency at which we want them to work, they need to have the

same impedance as the signals they are carrying. For example, if the

1:4 transformer shown above is to be used to match a 12.5Ω source to a

50Ω load, then each of the two transmission lines needs to have a 25Ω

impedance. The two line inputs in parallel will match to the 12.5Ω

source, and the two line outputs in series will match to the

50Ω

load. In many practical situations it will be necessary to construct

transmission lines of the proper, specific impedances. In other cases

it may be necessary to wind such transmission line transformer

elements with two or more coax cables and connect them in

parallel, to achieve a low impedance. For higher impedances it will be

necessary to use parallel transmission lines. And transformers for

extremely high or low impedances can be hard or impossible to

make.
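The impedance bookkeeping for this family of transformers, with the line inputs in parallel and the line outputs in series, can be sketched as follows. The function name is hypothetical, and the sketch assumes ideal, identical lines.

```python
def parallel_series_transformer(n_lines: int, low_z_ohm: float):
    """Return (impedance each line should have, impedance on the series side).

    n_lines identical lines have their inputs in parallel on the low impedance
    side and their outputs in series on the high impedance side.
    """
    line_z = n_lines * low_z_ohm   # each line carries 1/n of the input current
    high_z = n_lines * line_z      # n line outputs in series
    return line_z, high_z


for n_lines, low_z in ((2, 12.5), (3, 50 / 9)):
    line_z, high_z = parallel_series_transformer(n_lines, low_z)
    print(f"{n_lines} lines: {low_z:.2f} ohm source, {line_z:.2f} ohm lines, "
          f"{high_z:.2f} ohm load")
```

The second case shows why unusual line impedances come up quickly: a 1:9 transformer feeding a 50Ω load would need lines of about 16.7Ω.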

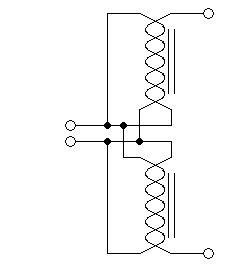

In some

situations, when the maximum operating frequency is low enough so that

the line length is very short compared to the wavelength, one can save one transmission

line and its core, by connecting the line outputs in series with the

signal input:

This configuration goes by many different names, among them "bootstrapped

transmission line transformer", "false transmission line transformer"

and "non-equal delay transmission line transformer". The point to keep

in mind is that this kind of configuration

does have a strict upper frequency limit, given by the requirement that

the transmission lines be very short relative to

the wavelength. The balun effect is also lost.

This circuit could be redrawn in this way, for improved clarity:

And many people claim that this is nothing other than a combination of

two autotransformers,

which could also be drawn like this:

So, remember one very important point: When you see

a combination of transmission lines wound around cores, in which each

end of every line is connected entirely either to the input side or to

the output side, it's a true transmission line transformer that has

a very wide frequency range. If instead you see lines that

have the two conductors of the same end connected one to the input

circuit and the other to the output circuit, then it's not

a true transmission line transformer, but one of those

contraptions that suffer from the same wire length limitations as

conventional transformers do.

Single-transistor tuned amplifiers:

Instead of using a choke to feed the supply current into the amplifier,

and using transformers to adapt the load impedance, it's possible to do

both tasks using tuned circuits. The disadvantage of this approach is

obvious: The amplifier will only be usable inside a relatively narrow

frequency range. But in many cases this is acceptable and even

desirable, for example in single-band radios. And there are

various advantages in using tuned circuits: They typically don't need

ferrite cores, which eliminates some cost and weight, and also does

away with all the characteristic problems of ferrite materials, such as

losses, DC saturation and nonlinearities.

The narrow-banded nature of tuned circuits makes them suppress

harmonics, and this allows operating these amplifiers in classes other

than class A. Tuned linear amplifiers can be easily built using a

single transistor, with far better efficiency than class A broadband

amplifiers. And class C tuned amplifiers, while typically nonlinear,

can easily achieve practical efficiencies of roughly 80%.

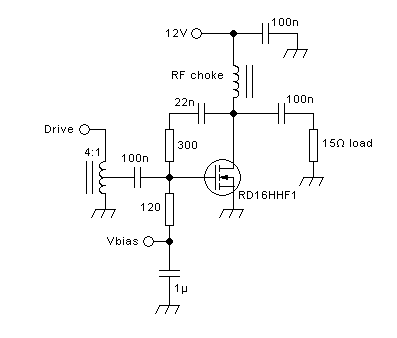

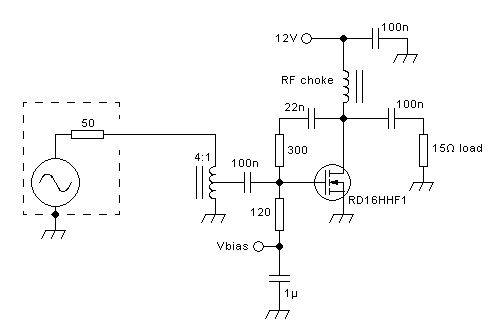

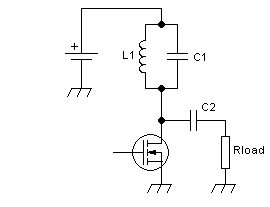

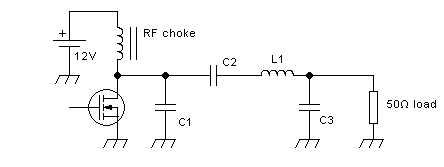

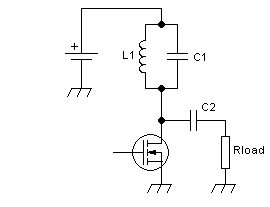

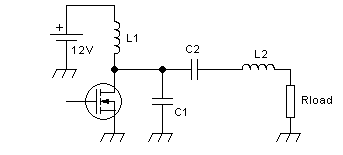

The basic single-transistor tuned amplifier's output section looks like

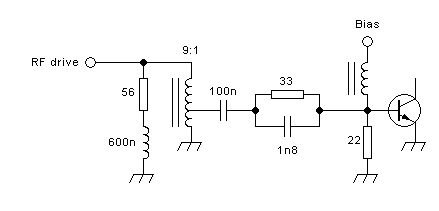

this:

As you have immediately spotted, it's the same basic circuit as the

broadband one, except that the choke has been replaced by a parallel

tuned circuit. This tuned circuit is resonant at the operating

frequency, or more typically at the center of the desired operating

frequency range. It has a Q factor suitably selected to meet several

goals: Higher Q leads to smaller bandwidth, better harmonic

suppression, smaller inductor, larger capacitor, higher loss and more

critical tuning. So the Q is a compromise, but many designers tend to

use a loaded Q of roughly 3 in a typical situation. This means that at

the operating frequency both L1 and C1 have one third as

much reactance as the resistance value of the load.

The inductive reactance of a coil is

Xl = 2 π F L

and the

capacitive reactance of a capacitor is

Xc = 1 ÷ (2 π F C)

where of

course F is the frequency in hertz, L the inductance in henries, C the

capacitance in farads, Xl the inductive reactance in ohms, Xc the

capacitive reactance in ohms, and π is roughly 3.1416.
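Solving these two formulas for L and C gives the component values for a chosen loaded Q. The sketch below is only an illustration: the function name is hypothetical, the 10Ω load and 14.2MHz frequency are arbitrary example values, and any transistor or stray capacitance is ignored.

```python
import math


def tank_values(load_ohm: float, freq_hz: float, loaded_q: float = 3.0):
    """Return (reactance in ohms, L1 in henries, C1 in farads) for a parallel tank."""
    reactance = load_ohm / loaded_q                       # Xl = Xc = R / Q
    inductance = reactance / (2 * math.pi * freq_hz)      # from Xl = 2 pi F L
    capacitance = 1 / (2 * math.pi * freq_hz * reactance) # from Xc = 1 / (2 pi F C)
    return reactance, inductance, capacitance


x, l1, c1 = tank_values(load_ohm=10, freq_hz=14.2e6)
print(f"X = {x:.2f} ohm, L1 = {l1 * 1e9:.1f} nH, C1 = {c1 * 1e12:.0f} pF")
```

A higher `loaded_q` gives a smaller inductor and a larger capacitor, in line with the compromise described above.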

It's very usual to connect C1 from the drain to ground rather than in

parallel to L1. It works exactly the same, because the top end

of L1 is grounded at RF through the battery, or in a

practical circuit through the bypass capacitors installed at that

location. The circuit then would look like this:

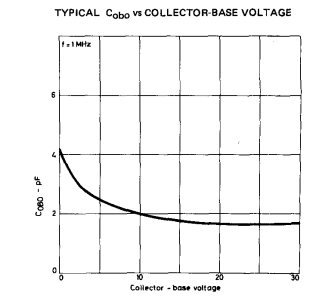

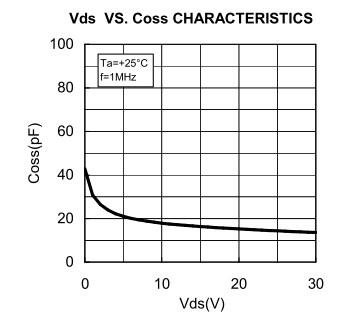

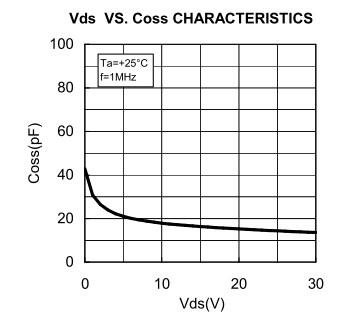

As you can see, C1 ends up directly in parallel with the

transistor's output side, which means that the transistor's output

capacitance, if significant, needs to be subtracted from the value you

use for C1. This is true just the same if C1 is placed in parallel to

L1, by the way.

The relationships between supply voltage, drain voltage, load

resistance and output power are exactly the same as in the broadband

amplifier. But tuned amplifiers are typically biased to a much

lower standby current, operating in class AB, or even to no standby

current at all, operating in class C. It's interesting to understand

what currents will flow in such a case: The resonant circuit

L1/C1,

if it has a high enough loaded Q, will always force a nearly sinusoidal

voltage at the drain, and at the same time can source or sink any

instantaneous current needed to achieve this. This is a very different

behavior from that of the choke in the broadband amplifier, which

delivers a constant current over the RF cycle, regardless of the drain

voltage waveform. We can also think of this in the frequency domain:

While a choke has a high impedance over the whole RF spectrum of

interest, a resonant circuit has a high impedance at the fundamental

frequency, but low impedance at its harmonics and all other out-of-band

frequencies.

Whenever

the transistor is driven on, it will draw a current from the load and

the resonant circuit, defined either by the instantaneous gate voltage

(while in active range) or by whatever current is available to be drawn

(when saturated). As the transistor draws current pulses, over the

course of several RF cycles the resonant circuit increases the sine

voltage

amplitude across it, until either an equilibrium is reached between the

average current drawn by the transistor and the current delivered to

the load, or the transistor saturates and the RF voltage gets

clipped to the supply voltage. MOSFETs conduct in both

polarities when driven on, and this will limit the peak RF voltage to

roughly the supply voltage, because the drain can't go significantly

negative. BJTs instead do not conduct collector-to-emitter when

reverse-polarized. But the base-collector junction does turn on, and

this pulls the base voltage down in such a situation, also limiting the

collector voltage swing. The clamped RF voltage, applied to the load

resistance, defines the load current, and this in turn limits the

average current that the transistor can draw. Of course, as the

conduction

angle of a class C amplifier is reduced, the peak current in the

transistor increases, for a given output power.

If a perfect (fully linear) transistor is biased for class B operation,

the amplifier will be linear, despite the transistor amplifying only

one half of the RF waveform, and staying completely off during the

other half. If a real-world transistor is used, which has a somewhat

nonlinear transfer curve, acceptable linearity for most

purposes can be achieved by biasing the transistor into class AB, with

the amount of idling current optimized for lowest distortion.
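The claim about the perfect class B transistor can be verified numerically. The sketch below models the drain current of an idealized class B stage as a half sine wave and extracts individual harmonics with a plain discrete Fourier sum. The function name is hypothetical, and the model ignores everything a real transistor does near its conduction threshold.

```python
import math


def harmonic_amplitude(drive_amplitude: float, harmonic: int,
                       samples: int = 3600) -> float:
    """Amplitude of one harmonic of the half-sine current of an ideal class B stage."""
    sin_sum = 0.0
    cos_sum = 0.0
    for n in range(samples):
        x = 2 * math.pi * n / samples
        current = max(0.0, drive_amplitude * math.sin(x))  # off during the negative half
        sin_sum += current * math.sin(harmonic * x)
        cos_sum += current * math.cos(harmonic * x)
    return 2 * math.hypot(sin_sum, cos_sum) / samples


for amplitude in (0.5, 1.0, 2.0):
    fundamental = harmonic_amplitude(amplitude, 1)
    second = harmonic_amplitude(amplitude, 2)
    print(f"drive {amplitude:.1f}: fundamental {fundamental:.3f}, "
          f"second harmonic {second:.3f}")
```

The fundamental comes out as exactly half the drive amplitude at every drive level, which is linear behavior, while the strong second harmonic is the part the tuned circuit has to remove.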

The very fact that RF power amplifiers can be linear even when the
transistor is completely off half of the time puzzles many newcomers
to RF, and this raises the need for another of my Blue Blocks:

Linearity at RF and

audio - two different worlds

Audio fans are used to just one kind of linearity: The

amplifier is

supposed to deliver an output signal that is an exact copy of the input

signal, except that it has been enlarged. Since audio equipment needs

to process a wide frequency range, such as 20Hz to 20kHz, a

1:1000 range, and many individual frequencies are usually present

simultaneously over

this wide range, forming a complex waveform which is often asymmetric,

there is really just one way to achieve the required

linearity: At every instant the amplifier has to produce an output

voltage that is exactly proportional to the input voltage, the

relationship being set by a fixed gain factor which stays constant over

the whole frequency range.

But in most kinds of RF work, we don't have such wideband signals. A

typical ham signal might have a center frequency of 14320kHz, but a

total bandwidth of just 3kHz. That's a bandwidth of just 0.02%, so

narrow that on a scope it looks like a single frequency. For this

reason the waveform of a ham radio signal will always

be extremely close to a perfect sine wave, and since we know the waveform,

there is no need to make the amplifier painstakingly reproduce it. We

can allow the amplifier to brutally distort the waveform, and then use

a

frequency-selective network to eliminate the harmonic frequency

components,

which fully restores the original sinewave shape. The amplifier only

needs to create an output signal whose power varies in exact proportion

to the drive power, and that has the same phase modulation, if any, as

the input signal. If the amplitude and phase are right, and the sine

waveform is reconstructed by frequency-selective networks, then the

amplifier will be just as linear for a narrow RF signal as an

amplifier that offers perfect waveform reproduction of any input signal.
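The idea that an amplifier can be amplitude-linear while its transistor is off half of the time can be checked numerically. The following is only a rough sketch under idealized assumptions: a perfectly linear transistor that conducts during exactly the positive half of each cycle, and an ideal filter that keeps nothing but the fundamental. The function names are made up for this illustration.

```python
import math

def fundamental_amplitude(waveform, samples=3600):
    """Amplitude of the fundamental sine component of one cycle of waveform(theta)."""
    total = 0.0
    for k in range(samples):
        theta = 2.0 * math.pi * k / samples
        total += waveform(theta) * math.sin(theta)
    return 2.0 * total / samples

def ideal_class_b(drive_amplitude):
    """Idealized class B stage: the output follows the drive only during the positive half cycle."""
    return lambda theta: max(0.0, drive_amplitude * math.sin(theta))

# Compare the filtered output against the drive at several drive levels.
for drive in (0.5, 1.0, 2.0):
    out = fundamental_amplitude(ideal_class_b(drive))
    print(f"drive {drive:.2f} -> filtered output {out:.3f} (ratio {out / drive:.3f})")
```

The ratio between filtered output and drive comes out the same at every drive level, which is all that amplitude linearity requires, even though the unfiltered waveform is far from a sine.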

So, remember: If an amplifier accurately reproduces both the amplitude

modulation and the phase modulation of the input signal, it's a linear

amplifier for all narrow signals at RF. Waveform linearity is

absolutely not required, but typically the sine waveform needs to be

reconstructed by filters, to avoid unacceptable harmonic distortion

causing interference on higher bands.

Audio guys can't do that, given their complex, wideband signals. On the

other hand audio guys get the advantage of transistors that are

thousands of times faster than their signals, and that have extremely

high gain at their frequencies, so they can easily build

waveform-linear amplifiers. We RF guys have a much harder time

doing so, because the available transistors are slow and have low gain

at

our frequencies, and even a few cm of circuit board trace cause a

phase delay that could be many degrees of our signal's period. So we do

whatever

is simplest and most cost-effective in achieving our goal, and very

often this is building

amplifiers that have extremely poor waveform linearity but good-enough

amplitude and phase linearity, and then we clean up their output

waveform using

resonant circuits or lowpass filters.

Since in most situations we need to design the amplifier for

a load resistance which is different from the drain load

needed to

produce the desired output power at the available supply voltage, we

need a matching network.

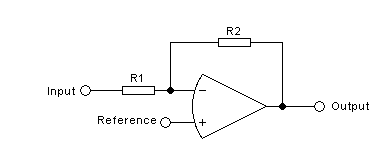

The simplest possible tuned matching network

is the L circuit. It's called L because it looks like a

lying-down letter L, consisting of either a series coil and a

parallel capacitor, or the other way around. The first option has some

lowpass behavior, the second one is rather a highpass. Since usually we

need to suppress harmonics, the lowpass version is by far the most used

one. The parallel element is always placed at the side of the L network

where the higher impedance is.

The equations for calculating the values for a lowpass L matching

network are the following:

Xl = √ ( Rlow × Rhigh - Rlow² )

Xc = Rlow × Rhigh ÷ Xl

Let's calculate the values for a 12V powered amplifier that delivers

30W into a 50Ω load, like in the broadband example given above. There

we found that we needed a transformer with a 1:5 turns ratio, giving us

a 1:25 impedance ratio, thus transforming the 50Ω load resistance down

to a 2Ω drain load. Let's do the same with a tuned L matching network:

Xl = √ ( 2 × 50 - 2² ) = 9.80

Xc = 2 × 50 ÷ 9.80 = 10.2

So we need a coil having 9.80Ω inductive reactance at the design

frequency, and a capacitor having 10.2Ω capacitive reactance. If we

want to build this amplifier for the amateur 6 meter band, we design it

for the center frequency, 52MHz. Applying the reactance equations given

higher up in this page, we end up with a 30nH coil and a 300pF

capacitor. Note that a 30nH coil might need just two turns of

wire, with a small diameter! This is why in amplifiers that work at

much higher frequencies than this one, such coils are no longer

practical, and circuit board traces (microstriplines) are used instead.

And the 300pF capacitor looks easy at first sight, but it's important

to make sure that it has negligible stray inductance. A capacitor with

wire terminals isn't a good idea here. A parallel combination of two or

more

SMD capacitors works well.
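For readers who prefer to let a computer do the arithmetic, the L network calculation above can be sketched in a few lines of Python. The function name is just an illustrative choice, and the conversion from reactance to component value uses the standard relations L = X ÷ (2πf) and C = 1 ÷ (2πfX).

```python
import math

def lowpass_l_network(r_low, r_high, freq_hz):
    """Return (XL, XC, L, C) for a lowpass L network matching r_low to r_high."""
    if not 0 < r_low < r_high:
        raise ValueError("need 0 < r_low < r_high")
    x_l = math.sqrt(r_low * r_high - r_low ** 2)   # series coil reactance
    x_c = r_low * r_high / x_l                     # parallel capacitor reactance
    omega = 2.0 * math.pi * freq_hz
    inductance = x_l / omega                       # L = XL / (2*pi*f)
    capacitance = 1.0 / (omega * x_c)              # C = 1 / (2*pi*f*XC)
    return x_l, x_c, inductance, capacitance

# The 12V, 30W example: 2 ohm drain load, 50 ohm output, 52MHz.
x_l, x_c, inductance, capacitance = lowpass_l_network(2.0, 50.0, 52e6)
print(f"XL = {x_l:.2f} ohm, XC = {x_c:.2f} ohm")
print(f"L = {inductance * 1e9:.1f} nH, C = {capacitance * 1e12:.0f} pF")
```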

Such a circuit almost always will need to be tuned after building. It

is possible to use trimmer capacitors, while such small coils are most

easily trimmed by deforming them, mostly changing the separation of

their turns.

While we are at it, let's quickly calculate the resonant circuit at the

drain: For a loaded Q factor of 3, and given the drain load resistance

of 2Ω, we need reactances of just 0.67Ω! At 52MHz this translates into

an inductance of barely 2nH, and a capacitance of 4.57nF. The drain

"inductor" would be just 2 or 3mm of straight wire, while the capacitor

would need to be made from several very small SMD parts in parallel to

achieve low enough stray inductance.

So these are quite impractical values. I chose this example to

illustrate the sort of difficulties often encountered in RF power

amplifier design: Very low impedances, requiring impossible components.

An amplifier like this, delivering 30W from 12V with a pretty clean

sine wave at the drain and a simple L matching network, can often be

made in the lower HF range, but hardly at higher HF, let alone VHF.

That's one reason why VHF amplifiers typically either work in some

other class, such as class E, or else have low efficiency.

We can start making compromises, to get our 52MHz 30W amplifier to

operate well enough. The most obvious one is lowering the Q of the

resonant circuit, to end up with workable components. The problem is

that as we reduce the Q, the waveform at the drain distorts, and we can

no longer obtain the power we expect without getting high drain voltage

peaks. If we retune the matching network

to deliver the desired power while using a very low Q drain tank, the

result is sky-high drain voltage peaks, which in the best case reduce

the efficiency of the amplifier, and in the worst case destroy the

transistor.

Reducing the tank Q to unity, resulting in a 6nH coil and a 1.5nF

capacitor, we still get the desired power, at a peak drain voltage of

only 27V, instead of the 22V we got with a Q of 3. This is more

workable. Reducing it further, to 0.5, using a 12nH coil and a total

capacitance of 750pF, which includes the transistor's output

capacitance, the drain voltage waveform gets very ugly, but the circuit

still works well enough, and our component values start becoming

practical. Below that level the drain voltage waveform definitely

becomes too peaky, deformed, and the output power falls off

dramatically.
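The drain tank values for the different Q factors discussed above can be reproduced with a short sketch. It assumes a simple parallel resonant circuit loaded by the drain load resistance, so that the reactance of each element is R ÷ Q. The function name is hypothetical.

```python
import math

def drain_tank(r_load, loaded_q, freq_hz):
    """Return (X, L, C) of a parallel tank across r_load for the given loaded Q."""
    reactance = r_load / loaded_q            # parallel circuit: Q = R / X
    omega = 2.0 * math.pi * freq_hz
    return reactance, reactance / omega, 1.0 / (omega * reactance)

# 2 ohm drain load at 52MHz, for the three Q values discussed in the text.
for q in (3.0, 1.0, 0.5):
    x, l, c = drain_tank(2.0, q, 52e6)
    print(f"Q = {q}: X = {x:.2f} ohm, L = {l * 1e9:.1f} nH, C = {c * 1e12:.0f} pF")
```

The results land close to the rounded values quoted in the text. Keep in mind that the capacitance is the total, including the transistor's output capacitance.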

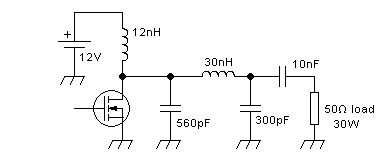

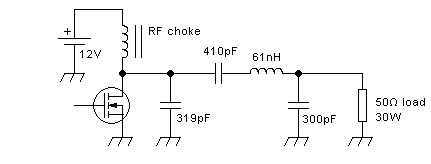

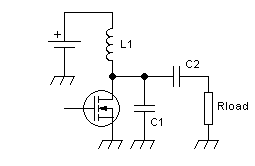

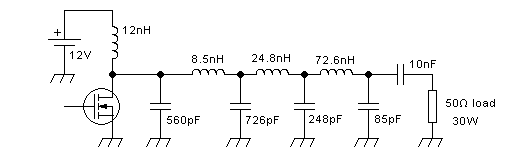

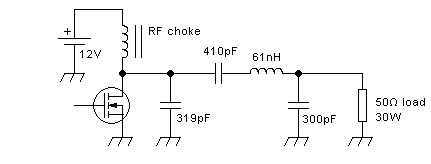

So, our 12V, 30W output section for the 50-54MHz band might end up

looking like this:

The 560pF is tentative, subject to confirmation of the output

capacitance of the transistor chosen. The 10nF capacitor serves just a

DC blocking function. Its reactance at the operating frequency is low

enough to have no significant effect. I placed it at the output side of

the L network, because there the RF current is much lower than at its

input side, and the DC voltage across the capacitor stays the same.

When building such an amplifier it's absolutely critical to minimize

stray inductances. The 560pF capacitor needs to be soldered directly to

the transistor's body, not one centimeter away. It needs to be a chip

capacitor, because any wire terminals at all will contribute too much

stray inductance. It's best to split it up into two capacitors of half

the value, and solder them to the transistor on both sides of the drain

terminal. Any deviation from this will make the amplifier behave less

like the calculation indicates. And even if you follow these

recommendations, you need to keep in mind that this design method is

very simplistic, for example by not considering the inductance of the

drain connection inside the transistor. One can usually get away with

such simplifications at HF, but at 52MHz it's risky, and on even higher

bands it definitely no longer works well enough. At VHF and higher one

needs to design the matching network for the load impedance the

transistor needs at its drain terminal, and not the one it needs

inside,

at its actual drain on the silicon chip. This load impedance needs to

be looked up in the data sheet, which usually gives it for a single or

a few operating frequencies and power levels. It often needs to be

interpolated, if your application is not a completely standard one for

the chosen transistor.

The L matching network is by no means the only choice for such a simple

amplifier operating in class AB or class C. In fact, very often there

are better choices. That's because the L network doesn't allow you to

choose the loaded Q of the network. The Q of an L network is

Q = √ ( Rhigh ÷ Rlow - 1 )

So in this example the loaded Q is 4.9. One of the implications of this

is that the network has a -3dB bandwidth of roughly one fifth the

center frequency. The usable bandwidth is even less, because 3dB power

drop at the band edges is not acceptable in a power amplifier. But

since the amateur 6 meter band has a width of one thirteenth

of

the center frequency, the bandwidth of this network with a Q of 4.9

would be sufficient. But there may be other applications in which more

bandwidth is needed, making a lower Q desirable. Or there might be a

more stringent requirement for harmonic suppression, making a

higher Q desirable.
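A quick way to check whether a single L network is wide enough for a given band is sketched below. It uses the rough estimate that the -3dB bandwidth equals the center frequency divided by the loaded Q, which is only an approximation, and the function names are invented for this example.

```python
import math

def l_network_loaded_q(r_low, r_high):
    """Loaded Q of a single L network: Q = sqrt(Rhigh / Rlow - 1)."""
    return math.sqrt(r_high / r_low - 1.0)

def approx_3db_bandwidth(center_hz, loaded_q):
    """Rough -3dB bandwidth estimate: center frequency divided by loaded Q."""
    return center_hz / loaded_q

q = l_network_loaded_q(2.0, 50.0)
bandwidth_hz = approx_3db_bandwidth(52e6, q)
band_hz = 54e6 - 50e6   # width of the 6 meter band

print(f"loaded Q = {q:.2f}")
print(f"approximate -3dB bandwidth = {bandwidth_hz / 1e6:.1f} MHz")
print(f"band to cover = {band_hz / 1e6:.1f} MHz")
```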

Also it's important to consider the stress placed on the components. A

loaded Q of 4.9 also means that the network is storing 4.9 times as

much reactive power, as the output power is. So, this little network is

storing nearly 150W of reactive power! Even if you make the tiny 30nH

coil from really thick wire, it might still have enough RF resistance

(which is far higher than its DC resistance, due to skin effect) to

heat up so much that it unsolders itself from the circuit! You might

need really

thick wire

for it, or perhaps use silver-plated copper sheet. The silver

plating helps just a tiny bit, but using sheet instead of round wire

helps a lot.

Likewise, the 300pF capacitor is under high stress. It works at the

full output voltage of 39V RMS, but also at nearly the full input

current to the network, specifically at 3.8A RMS. You need a capacitor

rated for at least this much ripple current, and that's typically a

"transmission type" capacitor, such as a porcelain chip capacitor, or

at least a very high quality NP0/C0G chip type. If you use a

trimmer, it also needs to be able to handle that much current. A small

cheap flimsy little trimmer won't do it, but a big, hefty, sturdy one

will have way too much stray inductance. You need a small, high quality

trimmer made for transmitter applications. Which is why experienced

designers try to avoid using trimmers in such places.
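The component stress figures mentioned above follow from basic circuit relations, and a small sketch makes them easy to recompute for other power levels or impedances. It assumes ideal, lossless components and a purely resistive load. The function and key names are made up for this illustration.

```python
import math

def l_network_stress(p_out_w, r_low, r_high):
    """Estimate voltages, currents and reactive power in a lowpass L network."""
    x_l = math.sqrt(r_low * r_high - r_low ** 2)
    x_c = r_low * r_high / x_l
    loaded_q = math.sqrt(r_high / r_low - 1.0)
    v_out_rms = math.sqrt(p_out_w * r_high)   # RMS voltage across load and parallel capacitor
    i_in_rms = math.sqrt(p_out_w / r_low)     # RMS current entering the network, through the coil
    return {
        "reactive_power_va": loaded_q * p_out_w,
        "coil_current_a": i_in_rms,
        "capacitor_voltage_v": v_out_rms,
        "capacitor_current_a": v_out_rms / x_c,
    }

stress = l_network_stress(30.0, 2.0, 50.0)
for name, value in stress.items():
    print(f"{name}: {value:.1f}")
```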

If you would like to increase the loaded Q, for better harmonic

suppression, there are several networks that allow doing it. The most

common of these is the LCC network, meaning one coil and two

capacitors. It looks exactly like the circuit we already have, and the

only change is that instead of using a large value DC blocking

capacitor (10nF in my example), we reduce the size of this capacitor to

make it an important part of the matching network. To design this

network, you pick the value of loaded Q you want, which must be higher

than the Q the L network would have, and then you use these equations:

XL = Q × Rlow

XC1 = Rlow ( 1 + Q² ) ÷ ( Q - √ ( Rlow ( 1 + Q² ) ÷ Rhigh - 1 ) )

XC2 = Rhigh √ ( Rlow ( 1 + Q² ) ÷ Rhigh - 1 )

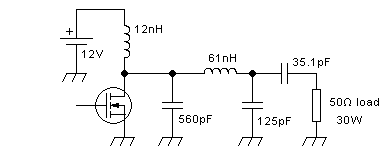

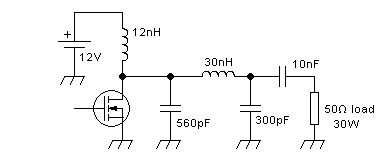

Applying these equations to our example of the 12V powered 30W

amplifier for 52MHz, and setting the Q at 10 (which is higher than

usual, but OK for this example), the inductor turns out to need a

reactance of 20Ω, and thus an inductance of 61nH. The first capacitor

needs to have 24.46Ω of reactance, which results in 125pF at this

frequency. And the second capacitor, the one that was just a coupling

capacitor when using an L network, now needs to have a reactance of

87.2Ω, and thus a capacitance of 35.1pF.

Hint: When using equations like the ones above, be very careful about

using the correct precedence of operations! While that's just sixth

grade maths or so, it's still easy to make a mistake.
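One way to avoid precedence mistakes is to write the LCC equations as code, with the repeated sub-expressions given their own names. This is just a sketch. The function and variable names are my own choice for the illustration, with C1 being the parallel capacitor and C2 the series one.

```python
import math

def lcc_network(r_low, r_high, q, freq_hz):
    """Return (L, C1, C2) for the LCC network, given the desired loaded Q."""
    q_min = math.sqrt(r_high / r_low - 1.0)        # Q of the plain L network
    if q <= q_min:
        raise ValueError("Q must be higher than that of the plain L network")
    a = r_low * (1.0 + q ** 2)                     # Rlow ( 1 + Q^2 )
    b = math.sqrt(a / r_high - 1.0)                # sqrt( Rlow ( 1 + Q^2 ) / Rhigh - 1 )
    x_l = q * r_low
    x_c1 = a / (q - b)
    x_c2 = r_high * b
    omega = 2.0 * math.pi * freq_hz
    return x_l / omega, 1.0 / (omega * x_c1), 1.0 / (omega * x_c2)

inductance, c1, c2 = lcc_network(2.0, 50.0, 10.0, 52e6)
print(f"L = {inductance * 1e9:.1f} nH")
print(f"C1 = {c1 * 1e12:.1f} pF, C2 = {c2 * 1e12:.1f} pF")
```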

Our amplifier's output section, with the loaded Q changed to 10, ends

up

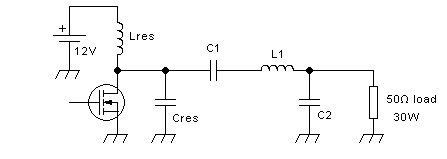

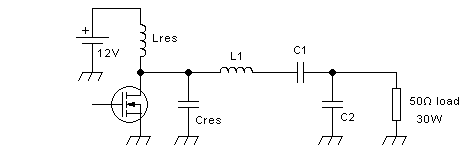

looking like this:

Another way to raise the Q is to place a series resonant circuit in

series with the inductor of the L network. The two inductances that end

up in series can of course be melted into a single inductor of the

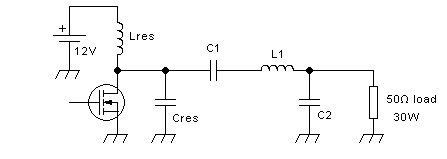

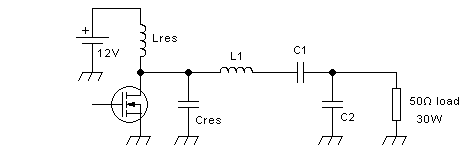

total inductance value. The circuit then looks like this:

or like this, whichever you like best:

The design equations for this network are the following:

XC1 = Q × Rlow

XL = √ ( Rlow × Rhigh - Rlow²) + XC1

XC2 = Rlow × Rhigh ÷ (XL - XC1)

So, for our 12V 30W 52MHz amplifier, designed with a Q of

10, XC1 is 20Ω, XL is 29.8Ω, and XC2 is 10.2Ω. This equates to

153pF, 91.2nH, and 300pF. Lres and Cres of course are resonant with

each other at the operating frequency, with a Q high enough to maintain

a reasonable sine wave, and low enough to not limit the bandwidth too

much and to have practical values, and Cres includes the transistor's

output capacitance.
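The three equations for this network translate directly into a short Python sketch. The function name is hypothetical, and the drain tank (Lres and Cres) is not included, since it is resonant on its own.

```python
import math

def series_tuned_l_network(r_low, r_high, q, freq_hz):
    """Return (C1, L, C2): series capacitor, series inductor and parallel capacitor."""
    x_c1 = q * r_low
    x_l = math.sqrt(r_low * r_high - r_low ** 2) + x_c1
    x_c2 = r_low * r_high / (x_l - x_c1)
    omega = 2.0 * math.pi * freq_hz
    return 1.0 / (omega * x_c1), x_l / omega, 1.0 / (omega * x_c2)

c1, inductance, c2 = series_tuned_l_network(2.0, 50.0, 10.0, 52e6)
print(f"C1 = {c1 * 1e12:.0f} pF")
print(f"L = {inductance * 1e9:.1f} nH")
print(f"C2 = {c2 * 1e12:.0f} pF")
```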

The -3dB bandwidth of these networks with Q = 10 will be only 5.2MHz,

so they are really a bit

too narrow to cover the entire 6 meter band with near-flat response. Q

factors as high as 10

are rarely used in solid state amplifiers. They were more typical in

tube amplifiers, where a high Q was necessary to allow the high

impedance ratios needed there, and to absorb the sky-high output

capacitance of tubes. But tube amp users had to pay for that

by tuning up their transmitters to the specific frequency they wanted

to transmit on.

In solid state practice it's more common to try achieving larger bandwidth,

be it to cover a wide band without retuning, such as 88 to 108MHz for

a frequency-agile FM broadcast transmitter, or be it to

mass-manufacture a design

without having to individually tune up each unit. More broadbanded

matching networks are less critical in terms of component tolerances

and accurate placement on the board.

There really aren't too many options when it comes to making low Q

tuned matching networks. The best approach at HF and low VHF is

probably cascading several L networks. In this case each L network has

a much lower Q than a single L network, and the combination of all of

them has a higher Q than the individual L networks composing it, but

lower than a

single L network that does the entire transformation.

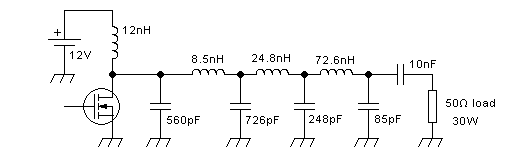

Let's try modifying the amplifier to use this approach, with three L

networks cascaded. Each of them will transform over the same resistance

ratio. Since the total ratio is 1:25, each L network needs to have a

ratio of 1: 2.924, because the cubic root of 25 is 2.924. And each L

network will then have a Q of 1.39. So, our first L network

matches 2Ω to 5.85Ω, the second one matches 5.85Ω to 17.1Ω, and the

third one matches 17.1Ω to 50Ω. Applying the equations for the L

network to each stage, the circuit ends up like this:
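The stage-by-stage calculation can be sketched as a loop that applies the L network equations to each step. The function name and the output layout are assumptions made for this illustration, and the computed component values are ideal ones that ignore stray reactances.

```python
import math

def cascaded_l_networks(r_low, r_high, stages, freq_hz):
    """Split the transformation into equal-ratio lowpass L stages.

    Returns (ratio, list of (r_in, r_out, L, C)) with one entry per stage.
    """
    ratio = (r_high / r_low) ** (1.0 / stages)
    omega = 2.0 * math.pi * freq_hz
    result = []
    r_in = r_low
    for _ in range(stages):
        r_out = r_in * ratio
        x_l = math.sqrt(r_in * r_out - r_in ** 2)   # series coil of this stage
        x_c = r_in * r_out / x_l                    # parallel capacitor of this stage
        result.append((r_in, r_out, x_l / omega, 1.0 / (omega * x_c)))
        r_in = r_out
    return ratio, result

ratio, stage_list = cascaded_l_networks(2.0, 50.0, 3, 52e6)
print(f"ratio per stage = 1:{ratio:.3f}, Q per stage = {math.sqrt(ratio - 1.0):.2f}")
for r_in, r_out, l, c in stage_list:
    print(f"{r_in:.2f} -> {r_out:.2f} ohm: L = {l * 1e9:.1f} nH, C = {c * 1e12:.0f} pF")
```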

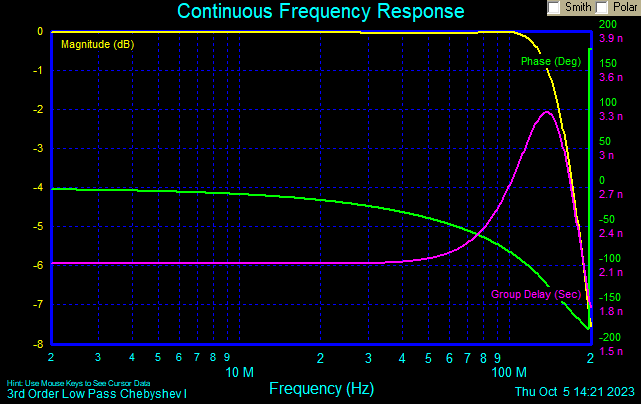

The -3dB bandwidth of this circuit is nearly 3 times wider than for the

simple L network. And the -1dB bandwidth, which is a more useful

characteristic for power amplifiers, improves more than threefold, from

around 10MHz to 33MHz.

The inductances in such a circuit get so small at VHF that they are

usually implemented as a single microstripline on the printed circuit

board, with the capacitors soldered to that strip at

strategically selected locations, to implement the proper

inductance between each capacitor and the next one. Sometimes the width

of the microstripline is adjusted for the different inductance values:

It starts very broad at the transistor, and then narrows down after

each capacitor. At HF instead

discrete coils can be used, but it's not very common to use this sort

of

wideband tuned amplifier at HF, because single HF bands are usually

narrow enough to use a simpler matching section, while to cover several

bands one needs a true broadband amplifier anyway. Instead a very

common application for such a network is a commercial VHF radio

covering 136 to 174MHz.

The tuned matching networks that I have described here just barely

scratch the surface, but tend to suffice for typical practical

amplifiers. There are many other possible configurations, including Pi

and T circuits. But not all of those lead to practical component

values. And there are many web pages devoted exclusively to impedance

matching, and some of them include online impedance matching

calculators, so if you are particularly interested in tuned impedance

matching, you should search and read some of those pages.

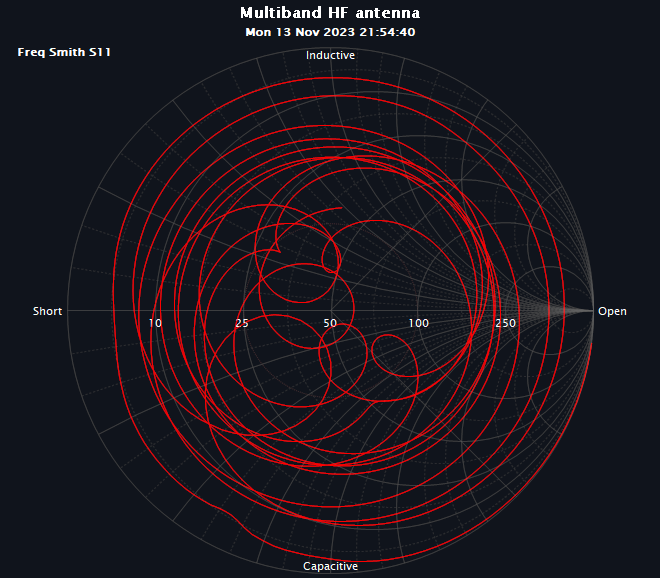

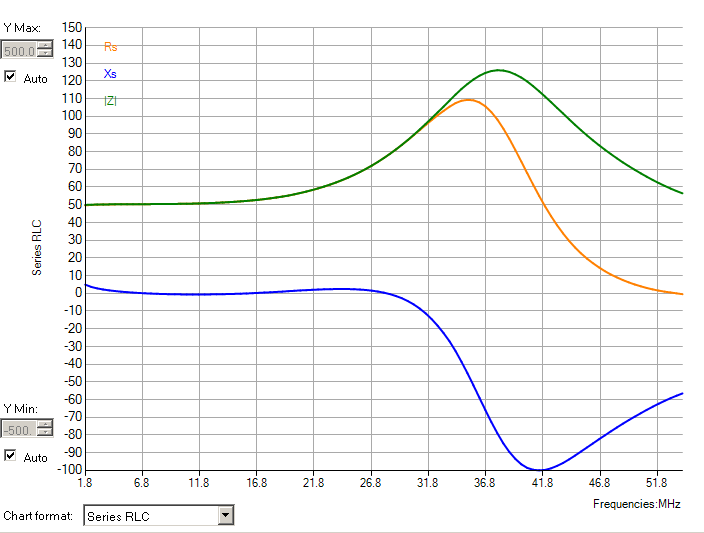

One more comment is due at this point: The data sheets of RF power

transistors give the required load impedances,

not just load

resistances, because a typical transistor does need some reactance in

the load at most frequencies, to compensate for its output

capacitance and for its lead and bonding wire inductance. It's a simple

matter to take the series-expressed complex impedance value from the

datasheet, design your matching network for the resistive part of that

impedance, and then add the required reactance in series with the input

of your network, by either reducing or increasing the first inductor's

value by the appropriate amount, depending on whether the transistor

needs a capacitive or inductive component in the load impedance.
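As a sketch of this procedure, the code below designs a lowpass L network for the resistive part of a series-expressed load impedance, and then adds the required reactance to the first inductor. The impedance of 2 - j1.5Ω used here is a made-up figure for illustration only, not taken from any data sheet, and the function name is hypothetical.

```python
import math

def l_network_for_complex_load(r_series, x_series, r_high, freq_hz):
    """Return (L, C) of a lowpass L network that presents r_series + j*x_series.

    x_series is positive when the transistor needs an inductive load component,
    and negative when it needs a capacitive one.
    """
    x_l = math.sqrt(r_series * r_high - r_series ** 2)
    x_c = r_series * r_high / x_l
    x_l_total = x_l + x_series                     # absorb the reactance into the coil
    if x_l_total <= 0.0:
        raise ValueError("required capacitive reactance exceeds the coil's reactance")
    omega = 2.0 * math.pi * freq_hz
    return x_l_total / omega, 1.0 / (omega * x_c)

plain_l, plain_c = l_network_for_complex_load(2.0, 0.0, 50.0, 52e6)
adj_l, adj_c = l_network_for_complex_load(2.0, -1.5, 50.0, 52e6)   # hypothetical 2 - j1.5 ohm
print(f"resistive load only: L = {plain_l * 1e9:.1f} nH, C = {plain_c * 1e12:.0f} pF")
print(f"with -1.5 ohm added: L = {adj_l * 1e9:.1f} nH, C = {adj_c * 1e12:.0f} pF")
```

Only the inductor changes, because the reactance is added in series at the input of the network.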

People who are too lazy to do these maths will simply design an

approximate matching network, and make two of its elements adjustable.

Then they will tune up their amplifiers for best power output and

efficiency, by adjusting trimmers or deforming coils. In the case of

amplifiers for HF, it's often practical to use coil formers

with

adjustable slugs in them.

All of these tuned output sections are suitable for use with all

traditional classes of operation: A, AB, B and C. Instead the

single-transistor broadband configuration is really good for

class

A only.

Class E

No, don't worry, I do know the alphabet, and I haven't

forgotten

class D. But class D amplifiers most commonly use two transistors

rather than one, and we aren't there yet, so I will treat them later.

Class E amplifiers instead are commonly built around a single

transistor, and look extremely similar to the tuned amplifiers we were

just busy with, and so it makes a lot of sense to treat them now and

here.

Class E amplifiers were developed to improve the efficiency beyond that

of a class C amplifier. They always operate with the transistor driven

into full saturation and full cut-off, either by using a squarewave

drive signal, or by strongly overdriving them with a sinewave. This is

the first step in achieving high efficiency, because a fully saturated

transistor can only cause a small conduction loss, and a transistor

that is off cannot cause any significant loss at all. Class D

amplifiers

do the same. But there is a big problem with class D: Transistors

simply cannot switch fast enough to keep the transition time

insignificantly small, at radio frequencies. Class E tries to eliminate

most of the switching loss by using an output network that shapes both

the drain voltage waveform and the drain current waveform, trying to

achieve a drain voltage as close to zero as possible while the

transistor is switching on, and trying to keep the drain current as

close to zero as possible while the transistor is switching off.

The circuit required to do this is almost the same as in the amplifiers

we were just looking at. The main difference is that since we will be

controlling the drain voltage waveform with our matching network, we

don't want any other resonant circuit at the drain. So we replace the

resonant inductor that feeds the supply current to the drain by an RF

choke, which forces a constant supply current over the RF cycle,

allowing

strange and even abrupt drain voltage waveforms.

Did I say "RF choke"? Yes. And while discussing class A

broadband amplifiers, I talked just about chokes. Time for another Blue

Block:

Chokes and RF chokes:

A choke is an inductor of such a high inductance value that it blocks

all alternating current that would want to flow, as much as possible.

That is, a choke carries essentially a pure DC at all times, as far as

imperfections and design compromises allow.

But a choke in a real-world circuit really doesn't need to fully block

all frequencies from a femtohertz to a petahertz and beyond. Nor could

we make such a choke. In practice, we just need the choke to block the

frequencies that we want blocked. Sometimes this could be all

frequencies present in a circuit, but at other times we might want a

choke to block a certain (usually broad) range of frequencies, but pass

another (very different) range of frequencies.

In RF amplifier work we are usually dealing with two such broad

frequency ranges. One of them is radio frequencies, usually spanning

from the lowest frequency of operation, to the highest important

harmonic of the highest frequency of operation. For an HF amplifier

operating from 1.8 to 54MHz, the RF range to block might span 1.8 to

500MHz or so.

The other frequency range we must not forget is the envelope

frequencies. That is, the frequencies contained in any amplitude

modulation of our signals. In an AM signal, this range is directly the

audio frequency range our transmitter passes. In SSB the range is

wider, theoretically infinite, but from a practical point of view the

important components extend to about 10 times the highest audio

frequency our transmitter passes. Even a Morse code signal contains a

significant modulation bandwidth, which must be cleanly processed by

the amplifier to avoid deformed keying and the resulting key clicks.

In RF power amplifiers usually all chokes need to block the RF range.

We rarely if ever use chokes that deliberately have to block the

modulation frequencies, since such chokes would be big, heavy,

expensive, and inefficient. But for chokes in some positions

it's irrelevant whether or not they block the modulation frequencies,

while for others it's essential that they don't block them! In my

opinion it's important to be aware, during amplifier design, whether a

choke must freely pass modulation frequencies, or whether it doesn't

matter how well it passes them. If you use a choke that noticeably

affects the higher modulation frequencies, in a location where the

choke needs to pass them, the choke will distort the transmitted

signal! Many people have run into distortion trouble they don't

understand, due to using a choke that has too much inductance and thus

affects the higher modulation frequencies.

For this reason, throughout this article I'm making an intentional

difference between chokes and RF chokes. When I use the term "choke", I

mean an inductor that reasonably blocks all RF current, without either you or
me needing to care whether or not it also has a noticeable effect on

modulation frequencies. Instead when I write "RF choke" I mean an

inductor that has enough inductance to block RF currents well enough,

while having low enough inductance to pass all modulation frequencies

without any noticeable impact on them.

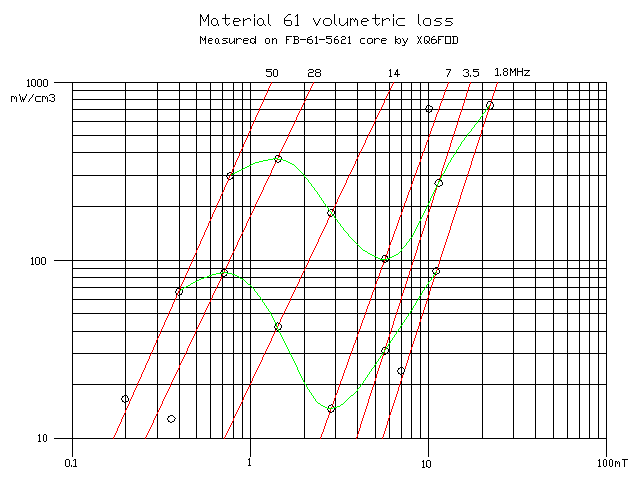

There is another point about chokes that I would like to briefly

mention: Many chokes are far from having a pure inductance.

Instead they have an impedance, that is, inductance in series with

resistance, and both the inductance and the resistance change with

frequency. Some chokes are almost purely resistive at the higher

frequencies. This behavior is fine, as long as the resistance is high

enough to keep the losses acceptable. After all resistance dissipates

power, while inductance does not.

It is often even desirable for a choke to be more resistive than

inductive, because this reduces the risk for resonances, reduces

phase shifts, and thus contributes to amplifier stability. But of

course any choke needs to have a low enough resistance to DC, and RF

chokes also need to have a low resistance to all

modulation frequencies too.

The amount of impedance a choke needs to have, of course depends on the

circuit impedances. A typical rule of thumb is making sure that the

choke has at least 10 times as much impedance as the circuit it's

connected to, at all frequencies in the range it has to block, but

specific applications might need even better choking, while others can

work well with a significantly lower choking impedance. Typically if

the choke's impedance is mainly resistive it needs to be higher than if

it's mostly inductive, to keep the losses at an acceptable

level. A factor of 100 is more common than 10, for a mostly

resistive choke.
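The rule of thumb can be turned into a quick estimate of the minimum inductance for a choke. This sketch assumes a mostly inductive choke, so its impedance is simply its reactance, and it uses a 2Ω circuit with a lowest operating frequency of 1.8MHz purely as an example. It also reports the reactance at 30kHz, taken here as ten times a 3kHz audio bandwidth, to show how little such a choke affects modulation frequencies. The function names are invented for this illustration.

```python
import math

def min_choke_inductance(circuit_ohms, lowest_blocked_hz, factor=10.0):
    """Smallest inductance whose reactance is factor times the circuit impedance."""
    return factor * circuit_ohms / (2.0 * math.pi * lowest_blocked_hz)

def reactance(inductance_h, freq_hz):
    """Inductive reactance in ohms at the given frequency."""
    return 2.0 * math.pi * freq_hz * inductance_h

choke_h = min_choke_inductance(2.0, 1.8e6)       # example: 2 ohm circuit, 1.8MHz
x_at_rf = reactance(choke_h, 1.8e6)
x_at_modulation = reactance(choke_h, 30e3)       # assumed highest important envelope frequency

print(f"minimum inductance = {choke_h * 1e6:.2f} uH")
print(f"reactance at 1.8MHz = {x_at_rf:.1f} ohm")
print(f"reactance at 30kHz = {x_at_modulation:.2f} ohm")
```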

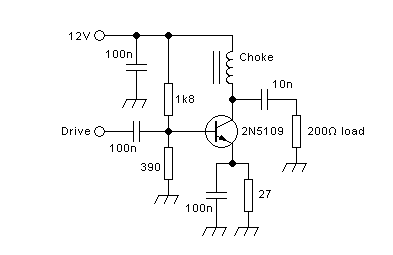

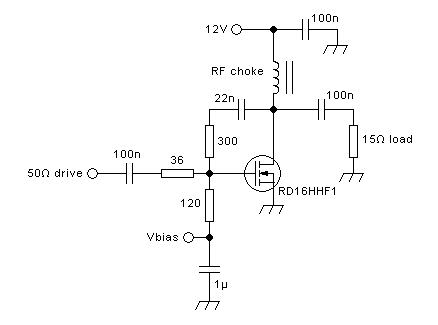

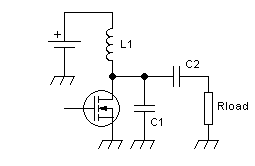

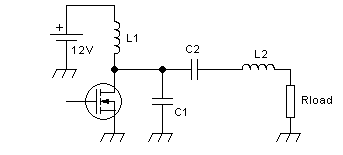

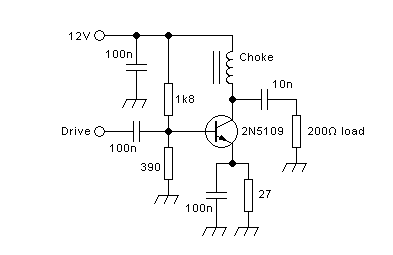

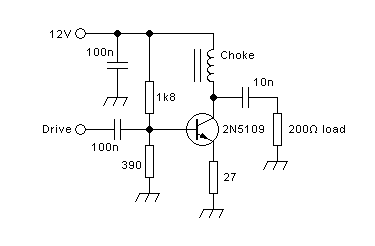

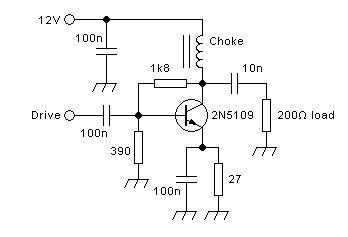

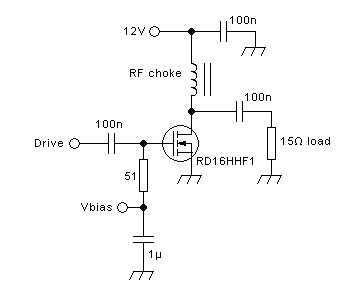

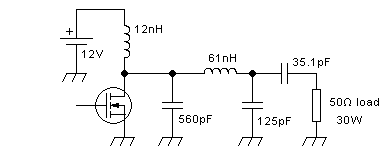

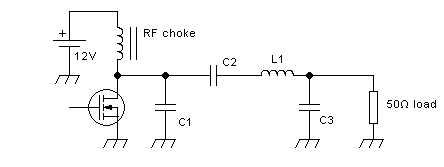

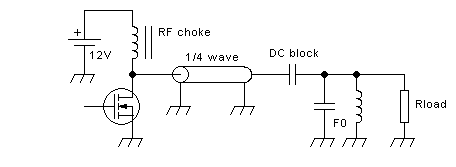

A typical, practical, class E amplifier's output section will look like

this:

No big surprises there, huh? You might ask what's new at all!

The

only difference that can be seen, between this class E amplifier and a

typical tuned amplifier that can be run in class A, AB, B or C, is the

use of an RF choke instead of a small tuned inductor. Even

this

difference isn't absolute, because class E amplifiers can be designed

to work with a small tuned inductor there too, although they work the

cleanest way when an RF choke is used, that is, the current supplied to

the circuit is forced to be a pretty smooth DC over the entire RF

cycle.

The main difference is in the way to calculate the component values.

The design equations for a class E amplifier are pretty complex, and

that's why I won't copy them here, and rather suggest that you use any

of the several online calculators and design programs that exist for

class E amplifiers. Most of these calculators, and also the equations

given on websites, are applicable to a textbook circuit like this:

You can punch the values you want for voltage, frequency, and power

into one of those online calculators,

including the value you pick for L1, and get values for C1, C2, L2 and

Rload. If you pick a relatively large inductance for L1 the circuit

will work better. A very low value for L1 might make you end up with

the need for negative capacitances or negative inductances somewhere...

And yes, Rload is calculated too, and would be 2Ω or very close to it,

if you try our exercise of a 12V 30W 52MHz amplifier. If you need a

different load, you have to add a matching section to that circuit. The

simplest one is the L, of course, so you calculate an L circuit to

match 2Ω to 50Ω and add it, and since that makes you end up with two

inductors in series, you can merge the two into a single inductor that

has the total inductance value.

Doing this for our 12V 30W 52MHz example, choosing a Q of 5

for the

basic class E network, and using an RF choke that has high impedance at

52MHz, makes us end up with these values:

Don't forget that you need to drive this transistor with a squarewave,

or with a pretty strong sinewave. You will be rewarded with a high

efficiency, that could be as high as 90% if you use a good transistor,

but you also have to be aware that the normal voltage peaks at the

drain will be at least 3 times the supply voltage, and during transient

conditions could be even higher. So you need a transistor with a higher

voltage rating in this circuit, than if you use a very similar looking

circuit operating in class A, AB, B or C with a sinewave

voltage

at the drain that doesn't even reach twice the supply voltage.

Many designers have tried to make a class C amplifier, for example for

an FM transmitter, and then have empirically tuned and tweaked the

matching network, while putting nice strong drive to the transistor,

until reaching efficiencies above 80%. In fact they ended up with class

E amplifiers, without even knowing it!

Since class E amplifiers operate in saturation, any amplitude

modulation of them, even on/off keying for Morse transmission, should

be done by modulating the supply voltage with a suitable waveform,

while keeping the drive signal going.

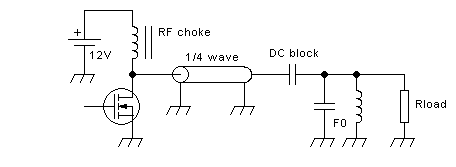

Class F

In order to get higher power from a given transistor, without exceeding

its drain voltage rating, someone came up with the idea to shape the

drain voltage waveform into a square wave, by designing an output

network that offers a short circuit to all even harmonics, but an open

circuit to all odd harmonics. This was done by placing a parallel

resonant circuit in parallel with the load, which offers a short for

all harmonics, and then connect it to the transistor via a

quarter-wave-long transmission line,

like this:

A quarter-wave-long transmission line has the interesting

property

that on all even harmonics it reflects the same load impedance

it

sees at its end, while on all odd harmonics, and the fundamental, it
transforms the impedance it sees to its image value as seen from the
line impedance, that is, the square of the line impedance divided by
the impedance at its end.

So, if we have a load resistance

of 50Ω, a resonant circuit tuned to the working frequency, and a

quarter-wave-long 10Ω transmission line, then at the working frequency

the

drain will see a 2Ω load. At all even harmonics it will see the short

circuit offered by the resonant circuit. And at all odd harmonics it

will see the short circuit of the resonant circuit transformed by the

line into an infinite impedance, that is, an open circuit. So the drain
voltage will become a square wave, while the drain current, which can
only contain the fundamental and even harmonics, takes the shape of
half-sine pulses.
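The behavior of the quarter-wave line at the fundamental and at the harmonics can be checked with the standard input impedance formula for a lossless transmission line. The sketch below assumes an ideal line, and treats the resonant circuit as a perfect short circuit at all harmonics. The function name is made up for this illustration.

```python
import math

def line_input_impedance(z_load, z_line, harmonic):
    """Input impedance of a lossless line that is a quarter wave long at the fundamental."""
    electrical_length = harmonic * math.pi / 2.0   # radians at the given harmonic
    # In floating point, tan() of an odd multiple of pi/2 is a huge finite
    # number rather than infinity, which is good enough for this sketch.
    t = math.tan(electrical_length)
    return z_line * (z_load + 1j * z_line * t) / (z_line + 1j * z_load * t)

# Fundamental: the 50 ohm load seen through a 10 ohm line.
print(f"fundamental: {abs(line_input_impedance(50.0, 10.0, 1)):.2f} ohm")

# Harmonics: the resonant circuit is taken as an ideal short (0 ohm).
for n in (2, 3, 4, 5):
    z = abs(line_input_impedance(0.0, 10.0, n))
    kind = "near short" if z < 1e-3 else "near open"
    print(f"harmonic {n}: {kind}")
```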

In theory it all works very nicely, and the efficiency should be very
high, along with a higher power output from a given transistor, if the
proper supply voltage is chosen. In practice, however, it's hard to get
a transmission line at UHF to perform just right. It would need to be
very thin, and then it can't handle high power. On HF, on the other
hand, such a line would be too long to be practical. And anyway, RF
transistors don't like square wave voltages on their drains, because
that causes large losses due to the need to charge and discharge the
transistor's output capacitance at a very high rate, and due to
internal parasitic BJTs in MOSFETs turning on at these very fast drain
voltage transitions. So I don't see class F amplifiers as being very
useful in the context of this article.
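To put numbers on the line length problem, here is a small sketch that computes the physical length of a quarter-wave line. The velocity factor of 0.66 is an assumption (typical for coax with solid polyethylene dielectric); a real low-impedance line may well have a different value.

```python
C0 = 299_792_458.0  # speed of light in vacuum, m/s

def quarter_wave_length_m(freq_hz, velocity_factor=0.66):
    """Physical length of a quarter-wave transmission line, in metres."""
    return C0 * velocity_factor / (4.0 * freq_hz)

for f_mhz in (1.8, 14.0, 54.0, 450.0):
    length = quarter_wave_length_m(f_mhz * 1e6)
    print(f"{f_mhz:6.1f} MHz: {length:7.3f} m")
```

On the 1.8MHz band the line comes out at well over 20 meters, while at 450MHz it is only about 11 centimeters long.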

To make a class F amplifier of a sensible size for HF, instead of the
transmission line one needs to use several parallel-tuned circuits
(traps), each tuned to one odd harmonic, installed in series in place
of the transmission line, and then one needs to add an L network for the
impedance transformation. This tends to become too complex to be
practical, especially considering that the network will only
cover a single band, and the losses in the several tuned circuits
tend to eat up any advantages the circuit might otherwise have. So
it's not common to see class F amplifiers used for HF.

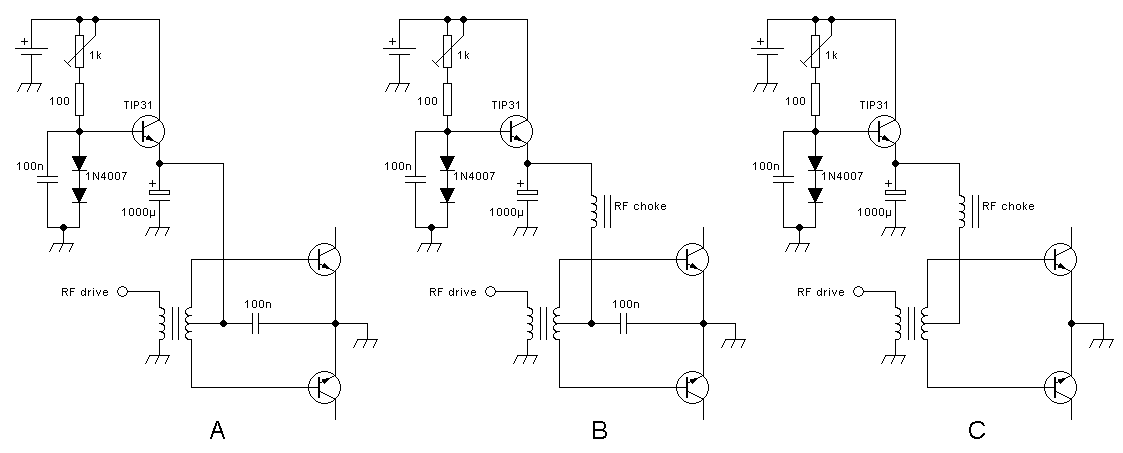

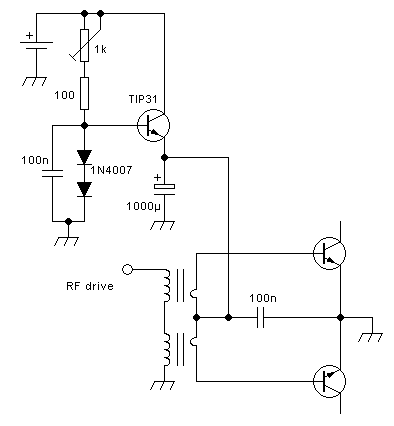

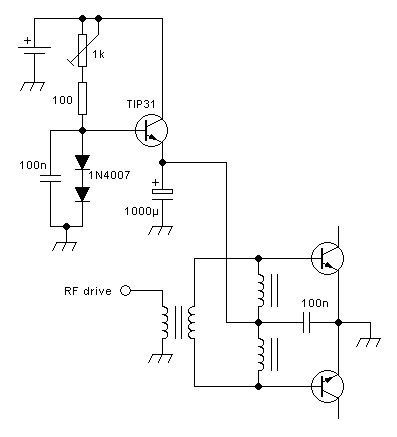

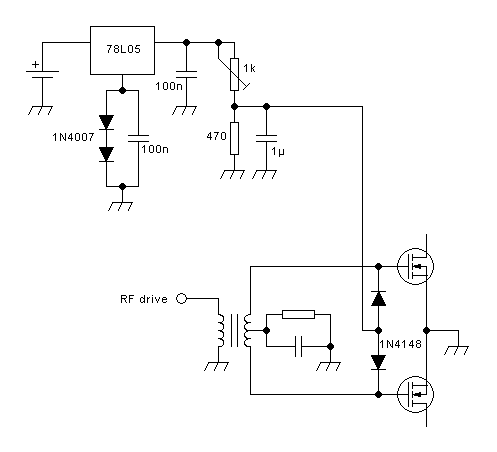

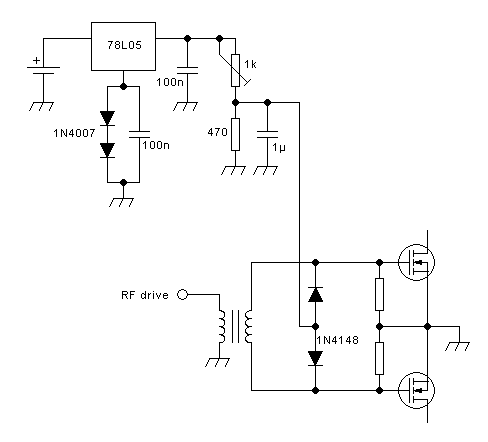

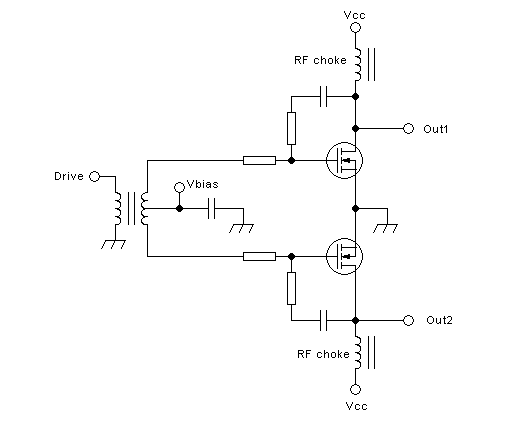

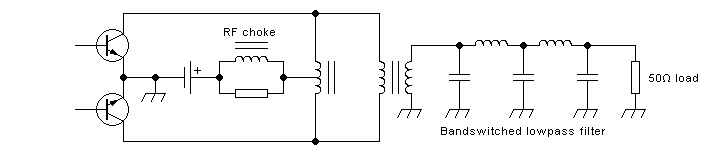

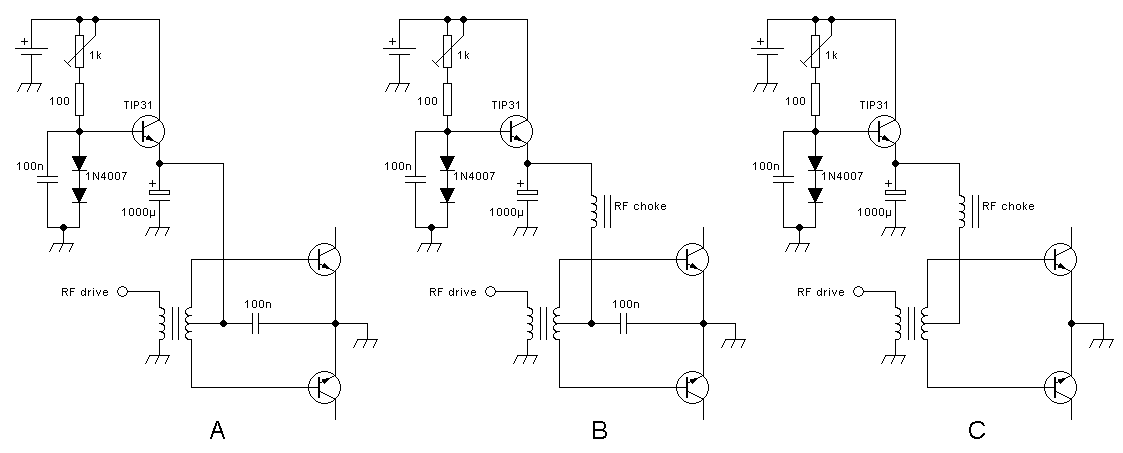

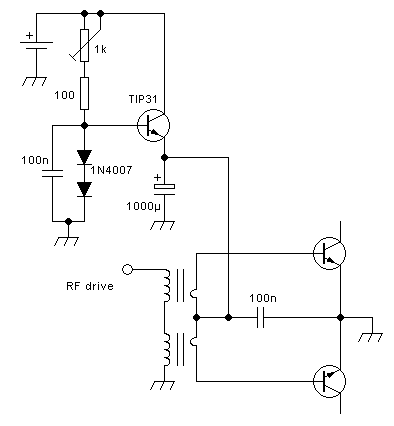

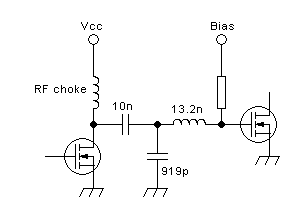

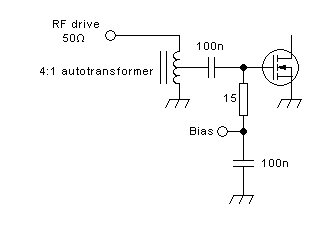

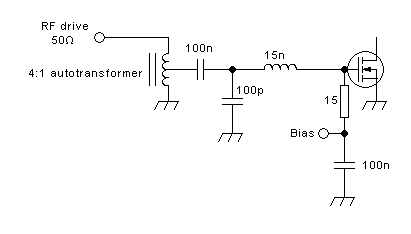

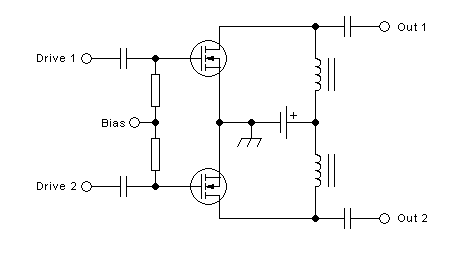

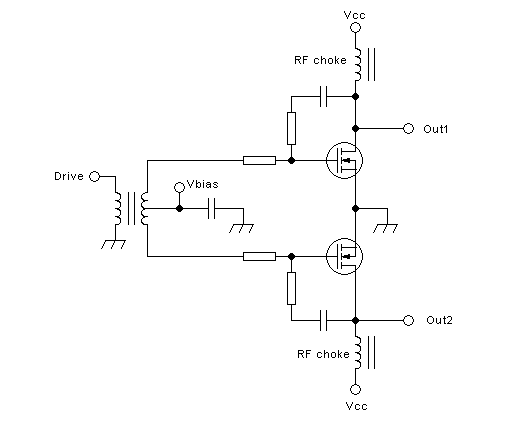

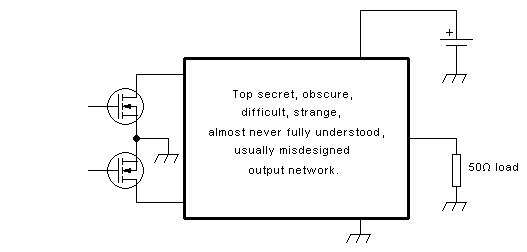

Broadband push-pull amplifiers:

Most HF amplifiers for radio purposes are broadband ones, not because
any broadband signals are to be transmitted, but because operation on
several bands is required. And given that single-transistor broadband
amplifiers need to run in class A to avoid massive harmonic output, and
class A is very inefficient, most broadband linear amplifiers for power
levels above a few watts are push-pull designs, which provide better
efficiency by running in class AB or class D, or a hybrid between
those two.

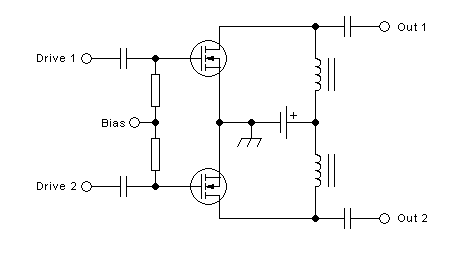

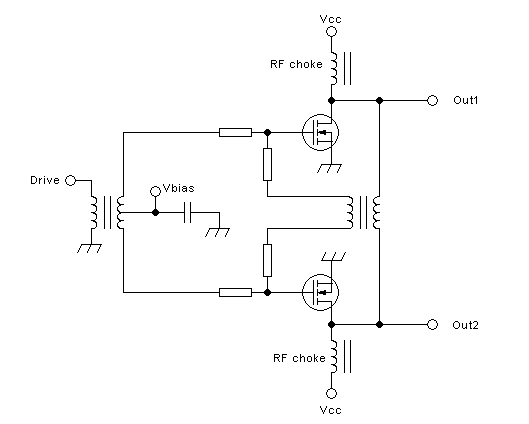

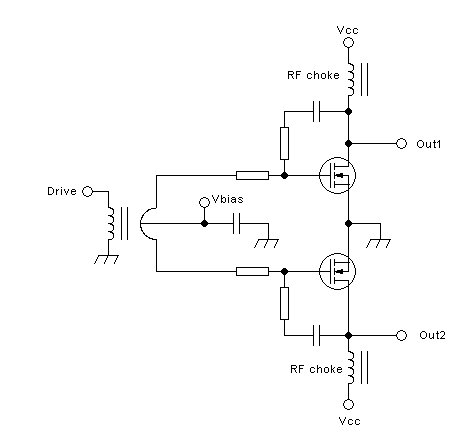

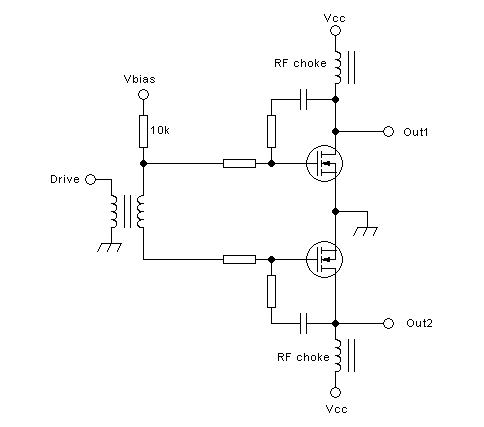

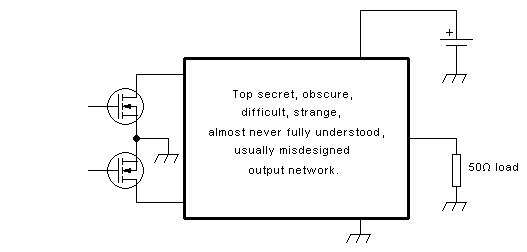

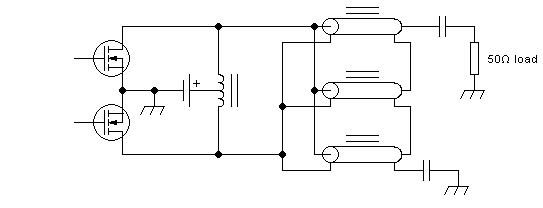

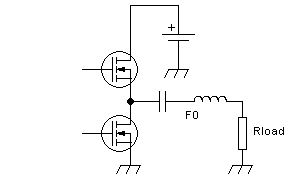

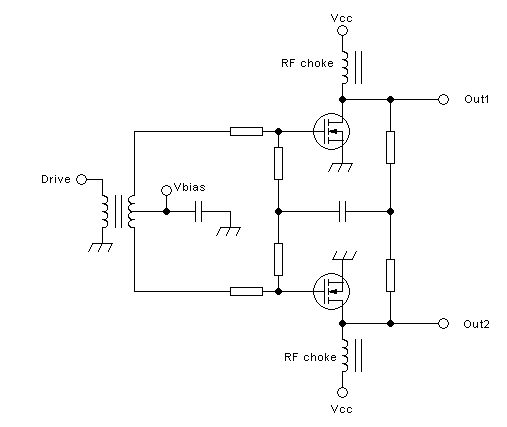

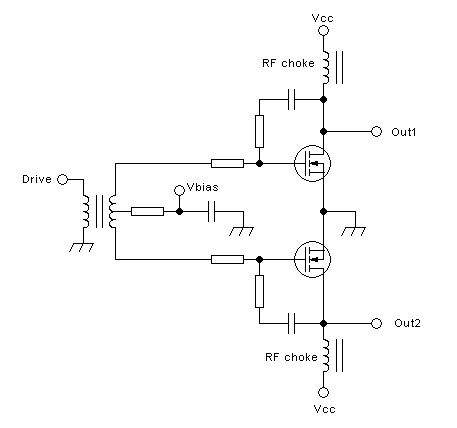

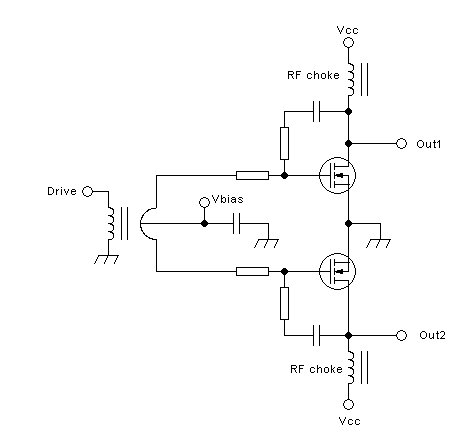

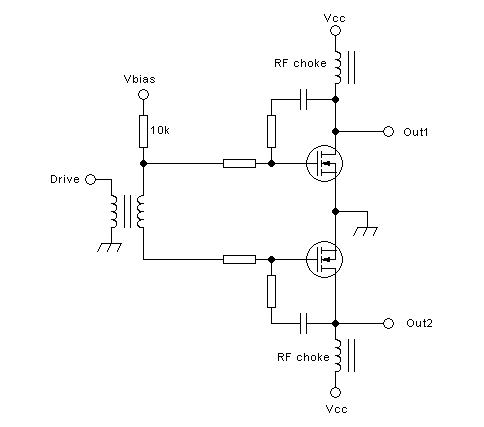

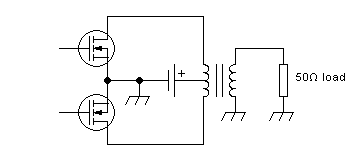

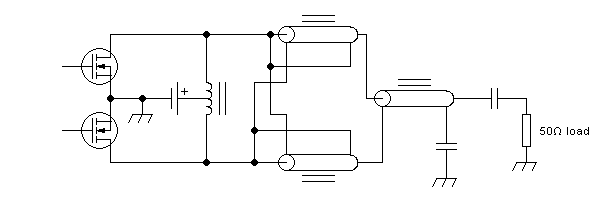

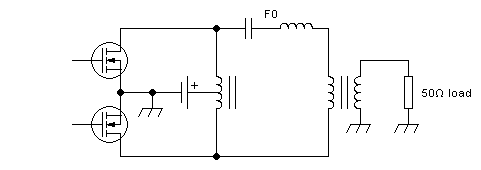

The general form of a push-pull amplifier's output section is like this:

If you try to look up that output network in books about RF power
amplifier design, you will hardly ever find anything useful, either
because the book doesn't treat HF, or because the author hasn't the
faintest idea about the matter. If a book actually describes such a
network, the description is usually copied from an older book or
magazine article, with all of the original faults and
misunderstandings. If you look into transistor datasheets and
application notes, you will find very few broadband push-pull circuits,
because the application engineers at those companies don't understand
them either and get poor results when trying to build any, so the
company prefers to publish some narrow-band design that works better
and thus puts their transistors in a better light.

So, let's tackle this matter piece by piece. The method is: Divide and

conquer. A very old and well-proven approach.

A push-pull output network needs to perform several functions. Some of
them are the same as those a network for a single-transistor amplifier
needs to perform:

- Inject the power supply to the transistors.

- Transform the load resistance to whatever value is needed between the
drains, for the available supply voltage and the required output power.

- Block the DC from reaching the load.

A push-pull output network also has some additional tasks:

- Act as a balun, to transform the single-ended load into a balanced load
between the drains.

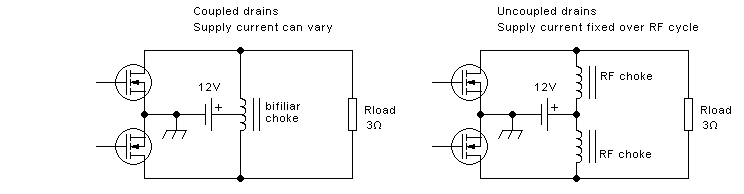

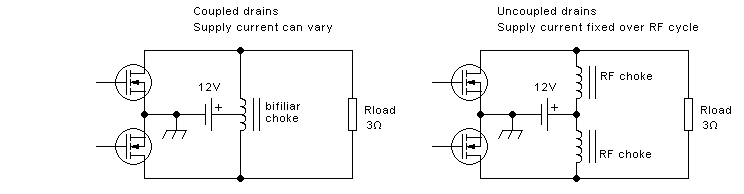

- Tightly couple the drains together, in phase inversion. This function
is required for class AB and voltage-switching class D operation. In
class A it's not required, but doesn't hurt if present. For
current-switching class D operation it must be absent.

- Restore a sinewave signal, through bandpass or lowpass filtering.
This is required in class D, but optional in class A and AB, depending
on whether the amplifier can provide the required harmonic suppression
just by operating very linearly. Most often it cannot...

- Properly handle or suppress common-mode signals. This may or may not
be necessary, depending on things like the type of feedback employed,
if any, the drive method, and the linearity of the transistors. I will
treat this point when getting to driving and feedback circuits.
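As a rough illustration of the load transformation task in the list above, the drain-to-drain load resistance can be estimated from the supply voltage and the output power. This is only a first-order sketch: it assumes coupled drains that each swing sinusoidally all the way between zero and twice the supply voltage, and the `v_sat` parameter is a hypothetical allowance for the voltage the transistors can't use.

```python
def drain_to_drain_load(v_supply, p_out, v_sat=0.0):
    """Idealized drain-to-drain load resistance of a push-pull stage, in ohms."""
    v_peak = 2.0 * (v_supply - v_sat)   # peak RF voltage between the drains
    return v_peak ** 2 / (2.0 * p_out)  # from P = Vpeak^2 / (2 * R)

for v, p in ((12.0, 10.0), (12.0, 100.0), (50.0, 1500.0)):
    print(f"{v:4.0f} V, {p:6.0f} W -> {drain_to_drain_load(v, p):6.2f} ohm")
```

With these idealizations, a 100W amplifier on 12V needs just under 3Ω between the drains, and a 1.5kW amplifier on 50V needs a little over 3Ω. Real designs need a lower value, because the transistors can't pull their drains all the way down to zero volts.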

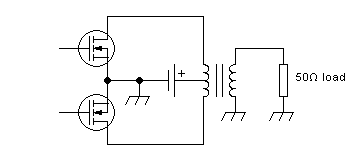

A very simple and convenient way to implement all of these tasks,

except filtering, is to employ a conventional transformer having a

center-tapped primary:

Since this circuit provides drain-to-drain coupling, but no filtering,

it's suitable for class A and class AB. If a filter is added, it can be

used for voltage-switching class D too. It could then also be

used

for class C, but that's rarely done. And class B is theoretically also

possible, but as explained way above, real-world circuits can never

truly work in class B.

The big problem with this nice and simple circuit is that its practical
implementation gets ever harder as the frequency gets higher, the
bandwidth gets larger, the power gets higher, and the supply voltage
gets lower. This is caused by the shortcomings of real-world
transformers, which never have perfect coupling. As the
frequency gets higher, the transformer needs to get smaller. But
smaller transformers might not offer enough inductance for operation on
low frequencies, might not handle the RF voltages without
excessive core heating, or might not allow using thick enough wire for
the currents that flow. At a given power, a higher supply voltage makes
leakage inductance less critical by a square factor, because the
required load resistance rises with the square of the voltage. But the
core needs to be much larger too, or more turns need to be used, both of
which increase the leakage inductance and also the parasitic
capacitances.
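The square-law effect of the supply voltage can be illustrated by comparing the reactance of a given leakage inductance to the load resistance it works against. In this sketch the 20nH leakage inductance is a purely hypothetical value chosen for illustration, and the load resistance uses the idealized relation R = 2·V²/P for a push-pull stage with full drain voltage swing.

```python
import math

def leakage_ratio(l_leak_h, freq_hz, r_load_ohm):
    """Leakage reactance expressed as a fraction of the load resistance."""
    return 2.0 * math.pi * freq_hz * l_leak_h / r_load_ohm

L_LEAK = 20e-9   # hypothetical leakage inductance, in henries
FREQ = 30e6      # top of the HF range, in hertz
P_OUT = 100.0    # output power, in watts

for v_supply in (12.0, 50.0):
    r_dd = 2.0 * v_supply ** 2 / P_OUT  # idealized drain-to-drain load
    ratio = leakage_ratio(L_LEAK, FREQ, r_dd)
    print(f"{v_supply:.0f} V: R = {r_dd:.2f} ohm, X/R = {ratio:.3f}")
```

At 12V the leakage reactance is larger than the load resistance itself, while at 50V the same inductance amounts to only a small fraction of it. The two ratios differ by the factor (50/12)², about 17.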

If we had really good magnetic core materials, life would be

much

easier. But with the materials available today, it's hard enough to

make such a transformer that works well from 1.8 to 30MHz in a 12V 10W

amplifier, and I wouldn't know how to reasonably build such a

transformer for a 1.8-54MHz, 12V 100W amplifier. Let alone a 1.8-54MHz,

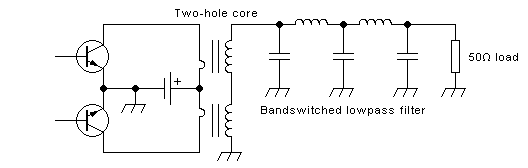

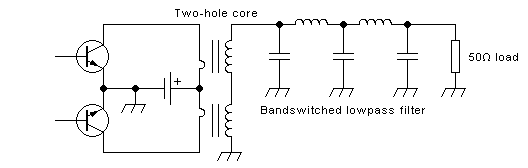

50V, 1.5kW one! Many 100W output stages use a conventional

transformer that has a two-hole core and a single-turn primary, but

this is not

the correct type of transformer for this circuit, because it

offers no coupling whatsoever between the halves of the primary. I will

get to that transformer, and its legitimate uses, just a little further

down this page.

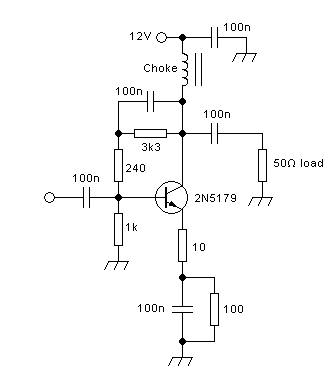

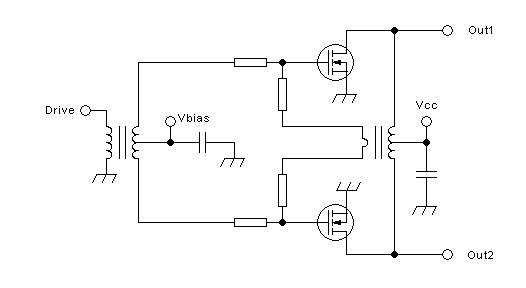

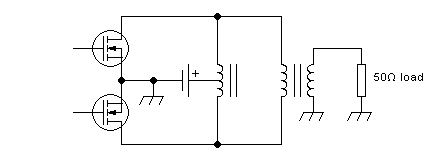

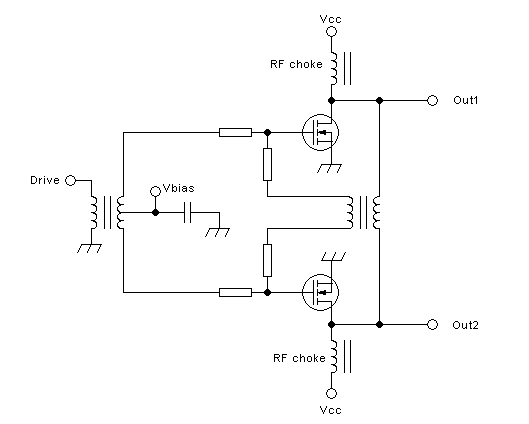

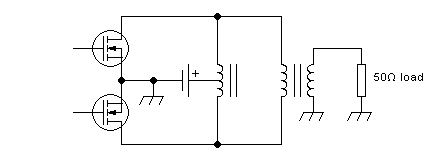

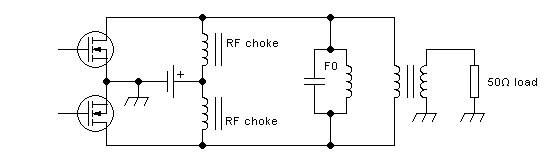

One way to make the situation more manageable is to split up the

functions of the output network between two magnetic devices. One of

them is a thing sometimes called a "bifiliar choke", also called

"balanced feed transformer", and several other names, which injects the

power supply and couples

the drains. The other device is a conventional transformer that

performs the load resistance matching, isolation and balun

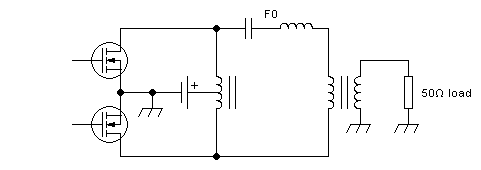

functions. The circuit looks like this:

Some people prefer to draw the feed transformer in some other way that
suggests its bifilar construction, such as this:

But I feel that this looks like scratching my left ear with my right
hand, so I prefer the other drawing style, which also suggests very
well that this feed transformer performs some of the same functions as
a center-tapped primary winding.

Splitting up the functions between two transformers makes the task
easier, but still not really easy. In amplifier configurations
requiring drain-drain coupling, it's still hard enough to make a
bifilar feed transformer that couples well from 1.8 to 30MHz, and very
hard if you want to get to 54MHz, as soon as the power level of the
amplifier is higher than about 10W at 12V, and 100W or so at 50V. It's
common to see ferrite toroids wound with 5 to 10 bifilar turns, but
these suffer from so much leakage inductance that they don't properly
couple the drains in the higher part of the HF range.

By the way, a twin-hole core with a single-turn primary is perfectly
fine as the impedance-transforming, balancing, and DC-isolating device,
when combined with an effective bifilar feed transformer, within its
frequency limitations, of course.

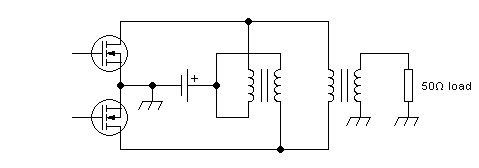

Instead of a conventional transformer it's possible to use

transmission-line transformers to do the load resistance matching and

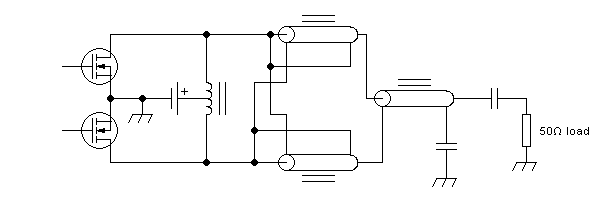

the balun action. An output section using a TLT with a 1:9 impedance

ratio

might look like this:

This circuit places a load resistance of 5.56Ω between the drains, and

also performs balun action. The true transmission line transformer has

a very wide frequency response, so one can pretty much stop worrying

about its

upper frequency limit. Each of the three transmission lines needs to

have a 16.7Ω impedance. The three lines in parallel give 5.56Ω at the

input, and the three lines in series give 50Ω at the output. If lines

of a wrong impedance are used, the transformer will still work at low

frequencies, but will become increasingly bad as the frequency rises,

because the lines will start doing some unwanted impedance

transformation on their own.
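The impedance values above follow from simple arithmetic, which can be sketched for any number of lines. The function name is made up for this illustration, and the sketch assumes an ideal transformer of this type, with all lines connected in parallel on the low-impedance side and in series on the high-impedance side.

```python
import math

def tlt_impedances(z_high, n_lines):
    """Return (low-side impedance, required line impedance), both in ohms."""
    z_low = z_high / n_lines ** 2  # impedance ratio is the square of the line count
    z_line = z_high / n_lines      # each line carries 1/n of the output voltage
    return z_low, z_line

z_low, z_line = tlt_impedances(50.0, 3)
print(f"low side: {z_low:.2f} ohm, each line: {z_line:.1f} ohm")

# The line impedance is also the geometric mean of the two terminations.
print(f"geometric mean: {math.sqrt(z_low * 50.0):.1f} ohm")
```

The same function gives 12.5Ω and 25Ω lines for a two-line, 1:4 transformer working into 50Ω.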

The uppermost line works at the highest end-to-end common-mode voltage,
so it also places the largest stress on its core. It should either have
the largest core, or the most turns. The lowermost line has the lowest
choking requirements, so it can use the fewest turns or the smallest
core. Since the lines should all have the same length, to maintain high
frequency performance, the best economical compromise is to use the
same length of line for each of the three, but use a different number
or size of cores on each.

Since a TLT doesn't provide DC blocking, suitable blocking capacitors

have been added. I put them on the output side, where the

current is lower.
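How much lower the current is on the output side follows directly from the impedance ratio. A short sketch, using 100W as an arbitrary example power level:

```python
import math

def rms_current(p_watts, r_ohms):
    """RMS current that delivers p_watts into a resistance of r_ohms."""
    return math.sqrt(p_watts / r_ohms)

P_OUT = 100.0  # arbitrary example power, in watts

i_output_side = rms_current(P_OUT, 50.0)       # at the 50 ohm load
i_drain_side = rms_current(P_OUT, 50.0 / 9.0)  # between the drains

print(f"output side: {i_output_side:.2f} A RMS")
print(f"drain side:  {i_drain_side:.2f} A RMS")
```

With a 1:9 impedance ratio the drain-side current is always three times the output current, regardless of the power level, so capacitors placed there would have to handle three times the RF current.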

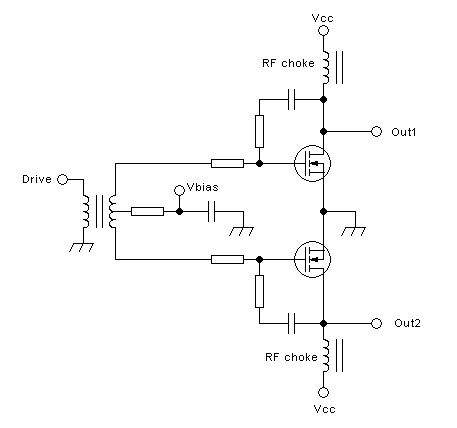

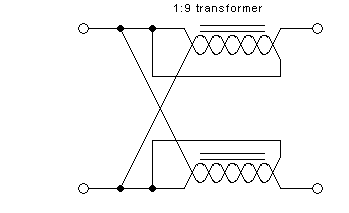

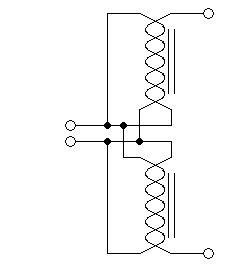

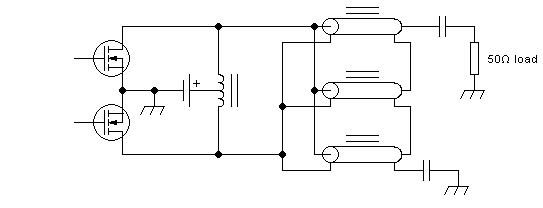

Sometimes a different configuration is used to achieve the same

purpose. The 1:9 transformer is implemented in a bootstrapped

configuration, saving one transmission line and its core. But then the

transformer doesn't provide balun action (common-mode current

blocking), and needs a separate balun (common-mode choke) added! The

circuit looks like this:

The two lines of the impedance-matching transformer now work at the

same end-to-end voltage, and thus are made identical. The balun works

at a higher end-to-end voltage and thus needs a larger core or

more turns.

Note that "larger core" in this context means a larger ferrite

cross-sectional area.

One could ask what point there is in replacing a true transmission line
transformer using three lines and three cores, by a bootstrapped
version that needs the same number of lines and cores but has a
definite and rather low upper frequency limit. The explanation is that
the latter version needs only 7/9 of the total ferrite cross section ×
turns product that the former one needs! So, when every penny
counts, the latter version is cheaper, and might get the job done, if
the required top frequency isn't too high. But often it's impractical
to take advantage of this, and designers choose the simpler
solution of using only one type of core. Also the former
version has the flatter frequency response.
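The 7/9 figure can be reproduced by adding up the end-to-end common-mode voltages of all lines in each version, since the required cross section × turns product of each core is proportional to that voltage. The node voltages below are my assumptions for this sketch, expressed as fractions of the output voltage: the drains sit at ±1/6, the unbalanced load runs from 0 to 1, and in the bootstrapped version the two lines produce a balanced ±1/2 ahead of the balun.

```python
from fractions import Fraction as F

def common_mode_drop(inp, out):
    """End-to-end common-mode voltage: mean of output pair minus mean of input pair."""
    return abs(sum(out) / 2 - sum(inp) / 2)

drains = (F(1, 6), F(-1, 6))  # balanced input, 1/3 of the output voltage across it

# True 1:9 TLT: three line outputs stacked in series from ground up to full output.
true_tlt = [
    common_mode_drop(drains, (F(1, 3), F(0))),
    common_mode_drop(drains, (F(2, 3), F(1, 3))),
    common_mode_drop(drains, (F(1), F(2, 3))),
]

# Bootstrapped version: two lines stacked onto the drains, then a separate balun.
balanced_out = (F(1, 2), F(-1, 2))
bootstrapped = [
    common_mode_drop(drains, (F(1, 2), F(1, 6))),
    common_mode_drop(drains, (F(-1, 6), F(-1, 2))),
    common_mode_drop(balanced_out, (F(1), F(0))),
]

print("true TLT:    ", true_tlt, "sum", sum(true_tlt))
print("bootstrapped:", bootstrapped, "sum", sum(bootstrapped))
print("ratio:", sum(bootstrapped) / sum(true_tlt))
```

Under these assumptions the three lines of the true TLT work at 1/6, 1/2 and 5/6 of the output voltage, while the bootstrapped version has two lines at 1/3 each plus the balun at 1/2, which also agrees with the balun needing the larger core or more turns.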

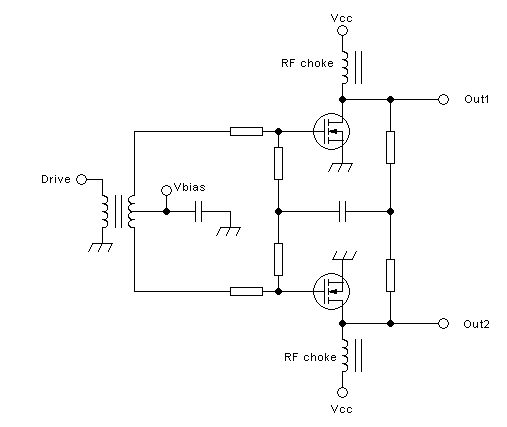

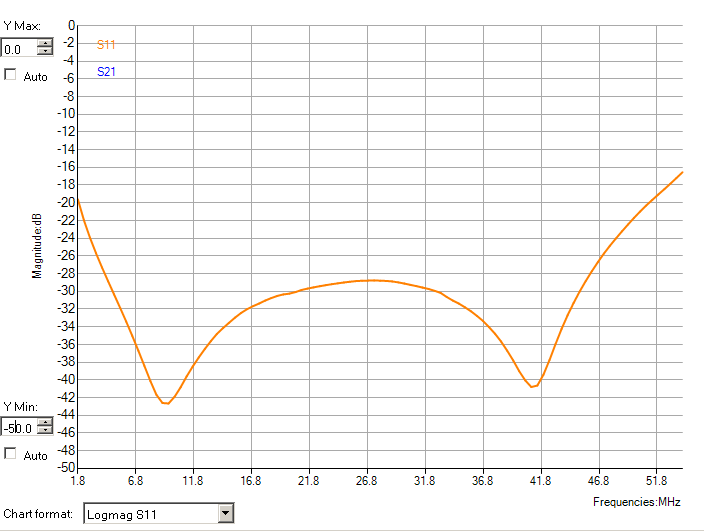

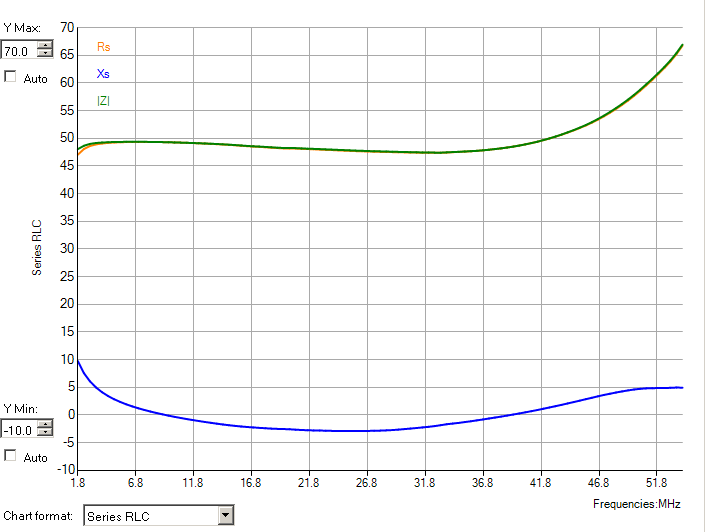

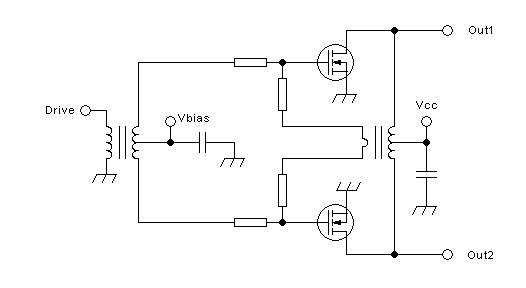

Now that we have a good solution for a very wideband load matching

system, it's time to look once more at the remaining trouble spot: The

coupling between the drains. Since it's too difficult in practice to

make split-primary transformers that produce the required excellent

drain-to-drain coupling, and in most cases with mid and high power

amplifiers operating to 54MHz it's also very hard or impossible to make

a bifiliar feed transformer that does this job, we are often forced by

practical reasons to consider amplifier circuits that don't need any

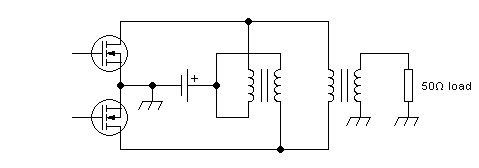

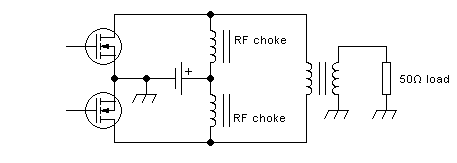

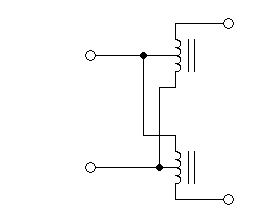

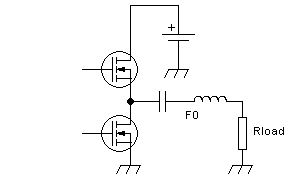

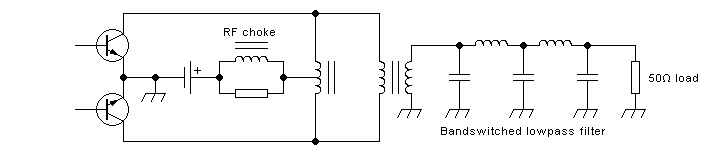

drain-to-drain coupling at all. For example, consider this output

section:

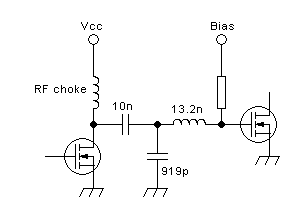

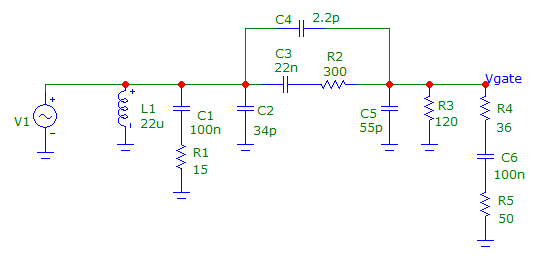

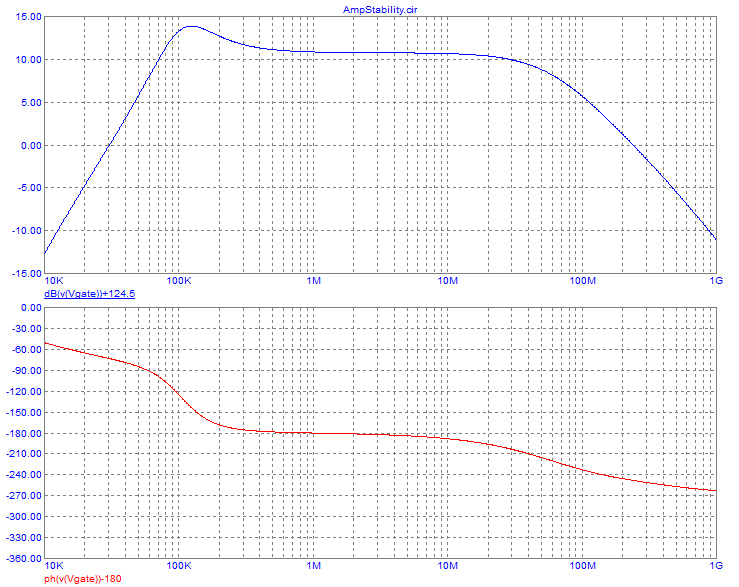

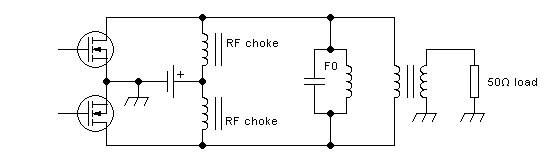

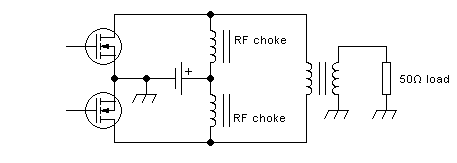

The power supply is now injected through two completely separate RF

chokes. They have absolutely no coupling between them, as shown

schematically by drawing two separate magnetic cores. Each of these RF

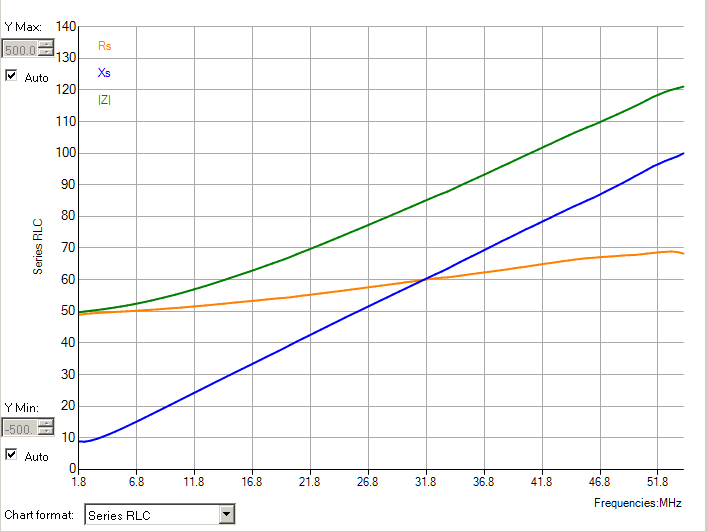

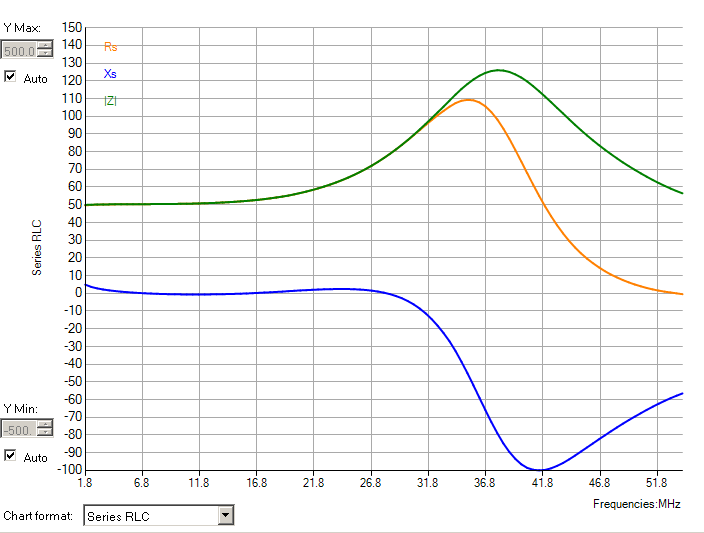

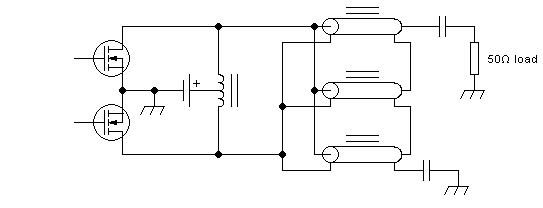

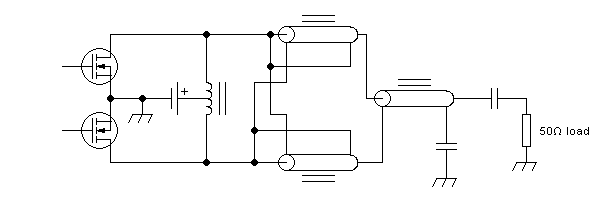

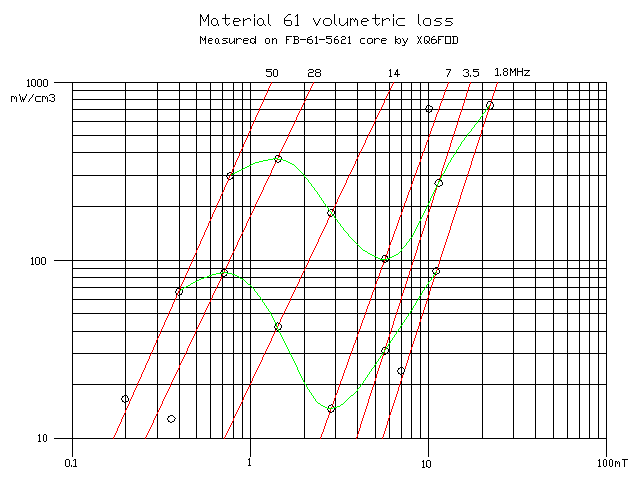

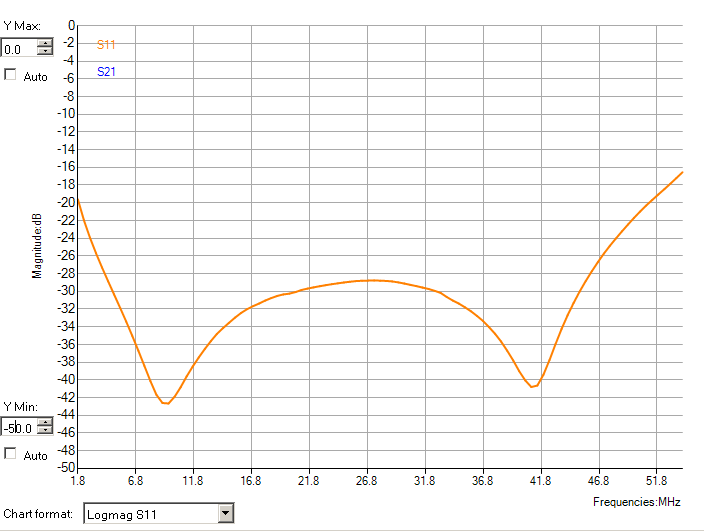

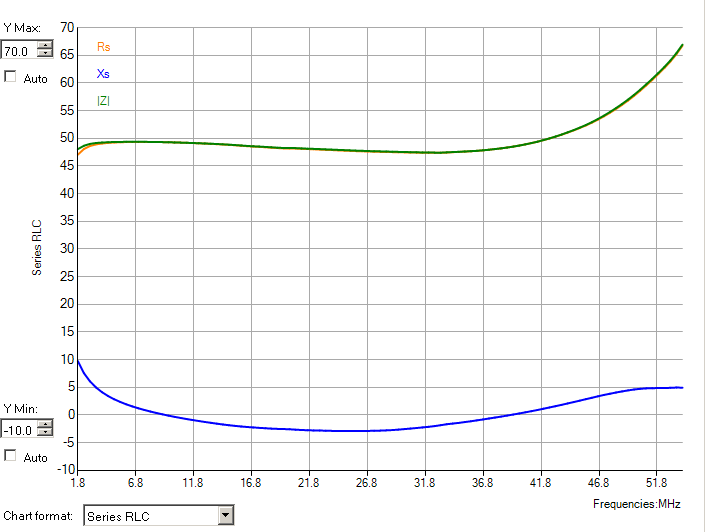

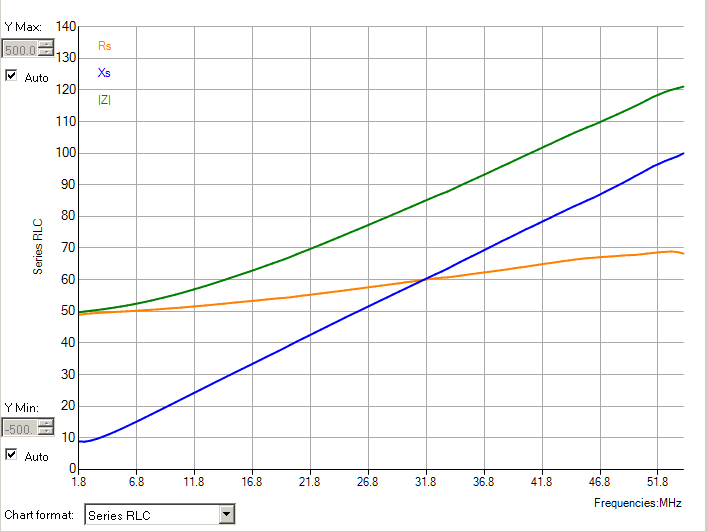

chokes will force a constant current over the entire RF cycle. The